The Goose Book

Have you ever been attacked by a goose?

What Is Goose?

Goose is a Rust load testing tool inspired by Locust. User behavior is defined with standard Rust code. Load tests are applications that have a dependency on the Goose library. Web requests are made with the Reqwest HTTP Client.

Advantages

Goose generates at least 11x as much traffic as Locust per-CPU-core, with even larger gains for more complex load tests (such as those using third-party libraries to scrape form content). While Locust requires you to manage a distributed load test simply to use multiple CPU cores on a single server, Goose leverages all available CPU cores with a single process, drastically simplifying the process for running larger load tests. Ongoing improvements to the codebase continue to bring new features and faster performance. Goose scales far better than Locust, efficiently using available resources to accomplish its goal. It also supports asynchronous processes enabling many more simultaneous processes to ramp up thousands of users from a single server, easily and consistently.

Goose’s distributed testing design is similar to Locust’s, in that it uses a one Manager to many Workers model. However, unlike Locust, you do not need to spin up a distributed load test to leverage all available cores on a single server, as a single Goose process will fully leverage all available cores. Goose distributed load tests scale near-perfectly as once started each Worker performs its load test without any direction from the Manager, and the Manager simply collects statistics from all the Workers for final reporting. In other words, one Manager controlling eight Workers on a single 8-CPU-core server generates the same amount of load as a single standalone Goose process independently leveraging all eight cores.

Goose has a number of unique debugging and logging mechanisms not found in other load testing tools, simplifying the writing of load tests and the analysis of results. Goose also provides more comprehensive metrics with multiple simple views into the data, and makes it easy to confirm that the load test is doing what you expect it to as you scale it up or down. It exposes the algorithms used to allocate scenarios and contained transactions, giving more granular control over the order and consistency of operations, important for easily repeatable testing.

What's Missing

At this time, the biggest missing feature of Goose is a UI for controlling and monitoring load tests, but this is a work in progress. A recently completed first step toward this goal was the addition of an optional HTML report generated at the end of a load test.

Brought To You By

Goose development is sponsored by Tag1 Consulting, led by Tag1's CEO, Jeremy Andrews, along with many community contributions. Tag1 is a member of the Rust Foundation.

Additional Documentation

Requirements

- In order to write load tests, you must first install Rust.

- Goose load tests are managed with Cargo, the Rust package manager.

Goose requires rustc version 1.70.0 or later.

Glossary

Controller

An interface that allows real-time control of a running Goose load test. Goose provides both Telnet and WebSocket controllers for dynamically adjusting test parameters like user count, hatch rate, and runtime during execution.

Coordinated Omission

A phenomenon that occurs in load testing when the measurement system inadvertently excludes the results of requests that were affected by server slowdowns, leading to misleadingly optimistic performance metrics. Goose includes Coordinated Omission Mitigation functionality to detect and correct for this.

Gaggle

Goose's distributed load testing functionality that allows running coordinated load tests across multiple machines. A Gaggle consists of one Manager and multiple Workers. Note: Gaggle support was temporarily removed in Goose 0.17.0.

GooseAttack

A load test defined by one or more Scenarios with one or more Transactions.

GooseConfiguration

A structure that defines all configuration options for a Goose load test, including user count, hatch rate, runtime, host, and various other parameters. Can be set via command line arguments, configuration files, or programmatically.

GooseError

A helper that defines all possible errors returned by Goose. A Transaction returns a TransactionResult, which is either Ok(()) or Err(GooseError).

GooseUser

A thread that repeatedly runs a single scenario for the duration of the load test. For example, when Goose starts, you may use the --users command line option to configure how many GooseUser threads are started. This is not intended to be a 1:1 correlation between GooseUsers and real website users.

Hatch Rate

The rate at which new GooseUsers are launched during the ramp-up phase of a load test, typically specified as users per second.

Request

A single request based around HTTP verbs.

Scenario

A scenario is a collection of transactions (aka steps) a user would undertake to achieve a specific user journey.

Test Plan

A flexible approach to scheduling load test phases, allowing you to define complex load patterns like gradual ramp-up, sustained load periods, spike testing, and graceful ramp-down. Test plans use the format users,duration;users,duration to specify multiple phases.

Throttle

A mechanism to limit the maximum number of requests per second made during a load test, helping simulate more realistic traffic by preventing GooseUsers from sending requests as fast as possible.

Transaction

A transaction is a collection of one or more requests and any desired validation. For example, this may include loading the front page and all contained static assets, logging into the website, or adding one or more items to a shopping cart. Transactions typically include assertions or expectation validation.

TransactionResult

A Result returned by Transaction functions. A transaction can return Ok(()) on success, or Err(GooseError) on failure.

Weight

A value that controls the frequency with which a Transaction or Scenario runs, relative to the other transactions in the same scenario, or scenarios in the same load test. For example, if one transaction has a weight of 3 and another transaction in the same scenario has a weight of 1, the first transaction will run 3 times as often as the second.
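The weight arithmetic can be sketched in plain Rust. This is only proportional math under the assumption that weights are positive integers; the function name `expected_runs` is hypothetical and this is not Goose's scheduler.

```rust
/// Splits `total_runs` between transactions in proportion to their weights.
/// Illustrative arithmetic only; Goose's own schedulers decide the real order.
fn expected_runs(weights: &[usize], total_runs: usize) -> Vec<usize> {
    let weight_sum: usize = weights.iter().sum();
    if weight_sum == 0 {
        // No weights to share between, so nothing runs.
        return vec![0; weights.len()];
    }
    weights
        .iter()
        .map(|weight| total_runs * weight / weight_sum)
        .collect()
}
```

With weights of 3 and 1 and 400 total runs, the first transaction is expected to run 300 times and the second 100 times.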

Getting Started

This first chapter of the Goose Book provides a high-level overview of writing and running Goose load tests. If you're new to Goose, this is the place to start.

The Importance Of Load Testing

Load testing can help prevent website outages, stress test code changes, and identify bottlenecks. It can also quickly perform functional regression testing. The ability to run the same test repeatedly gives critical insight into the impact of changes to the code and/or systems.

When to Use Goose

Goose is particularly well-suited for:

- Complex User Workflows: Testing multi-step processes like checkout flows, user registration, or content management workflows

- API Load Testing: Validating REST APIs, GraphQL endpoints, or microservice interactions under load

- Performance Regression Testing: Integrating into CI/CD pipelines to catch performance regressions before deployment

- Capacity Planning: Understanding how your infrastructure scales and where bottlenecks occur

- Coordinated Omission Detection: Identifying when server slowdowns affect more users than simple metrics suggest

Goose vs Other Load Testing Tools

Unlike tools that focus purely on HTTP request volume, Goose excels at:

- Stateful Testing: Maintaining sessions, cookies, and authentication across requests

- Realistic Load Patterns: Simulating actual user behavior rather than just hammering endpoints

- Developer-Friendly: Written in Rust with type safety and excellent error handling

- Detailed Analysis: Advanced metrics that reveal hidden performance issues

- Flexibility: Custom logic, data-driven tests, and complex scenarios

Prerequisites

Before diving into Goose, you should have:

- Basic Rust Knowledge: Familiarity with Rust syntax, async/await, and error handling

- HTTP Understanding: Knowledge of HTTP methods, status codes, and web application architecture

- Testing Mindset: Understanding of what you want to test and what constitutes success

Don't worry if you're new to load testing - Goose's approach will guide you toward writing realistic and valuable tests.

Creating A Load Test

Cargo

Cargo is the Rust package manager. To create a new load test, use Cargo to create a new application (you can name your application anything, we've generically selected loadtest):

$ cargo new loadtest

Created binary (application) `loadtest` package

$ cd loadtest/

This creates a new directory named loadtest/ containing loadtest/Cargo.toml and loadtest/src/main.rs. Edit Cargo.toml and add Goose and Tokio under the dependencies heading:

[dependencies]

goose = "^0.18"

tokio = "^1"

At this point it's possible to compile all dependencies, though the resulting binary only displays "Hello, world!":

$ cargo run

Updating crates.io index

Downloaded goose v0.18.1

...

Compiling goose v0.18.1

Compiling loadtest v0.1.0 (/home/jandrews/devel/rust/loadtest)

Finished dev [unoptimized + debuginfo] target(s) in 52.97s

Running `target/debug/loadtest`

Hello, world!

Creating the load test

To create an actual load test, you first have to add the following boilerplate to the top of src/main.rs to make Goose's functionality available to your code:

use goose::prelude::*;

Note: Using the above prelude automatically adds the following use statements necessary when writing a load test, so you don't need to manually add all of them:

use crate::config::{GooseDefault, GooseDefaultType};
use crate::goose::{
    GooseMethod, GooseRequest, GooseUser, Scenario, Transaction, TransactionError,
    TransactionFunction, TransactionResult,
};
use crate::metrics::{GooseCoordinatedOmissionMitigation, GooseMetrics};
use crate::{scenario, transaction, GooseAttack, GooseError, GooseScheduler};

Then create a new load testing function. For our example we're simply going to load the front page of the website we're load-testing. Goose passes all load testing functions a mutable reference to a GooseUser object, which is used to track metrics and make web requests. Thanks to the Reqwest library, the Goose client manages things like cookies, headers, and sessions for you. Load testing functions must be declared async, ensuring that your simulated users don't become CPU-locked.

In load test functions you typically do not set the host, and instead configure the host at run time, so you can easily run your load test against different environments without recompiling. Relative paths (not starting with a /) should be used.

The following loadtest_index function simply loads the front page of our web page:

use goose::prelude::*;

async fn loadtest_index(user: &mut GooseUser) -> TransactionResult {
    let _goose_metrics = user.get("").await?;

    Ok(())
}

The function is declared async so that we don't block a CPU-core while loading web pages. All Goose load test functions are passed in a mutable reference to a GooseUser object, and return a TransactionResult which is either an empty Ok(()) on success, or a TransactionError on failure. We use the GooseUser object to make requests, in this case we make a GET request for the front page, specified with an empty path "". The .await frees up the CPU-core while we wait for the web page to respond, and the trailing ? unwraps the response, returning any unexpected errors that may be generated by this request.

When the GET request completes, Goose returns metrics which we store in the _goose_metrics variable. The variable is prefixed with an underscore (_) to tell the compiler we are intentionally not using the results. Finally, after making a single successful request, we return Ok(()) to let Goose know this transaction function completed successfully.

Now we have to tell Goose about our new transaction function. Edit the main() function, setting a return type and replacing the hello world text as follows:

#[tokio::main]
async fn main() -> Result<(), GooseError> {
    GooseAttack::initialize()?
        .register_scenario(scenario!("LoadtestTransactions")
            .register_transaction(transaction!(loadtest_index))
        )
        .execute()
        .await?;

    Ok(())
}

The #[tokio::main] at the beginning of this example is a Tokio macro necessary because Goose is an asynchronous library, allowing (and requiring) us to declare the main() function of our load test application as async.

If you're new to Rust, main()'s return type of Result<(), GooseError> may look strange. It essentially says that main will return nothing (()) on success, and will return a GooseError on failure. This is helpful as several of GooseAttack's methods can fail, returning an error. In our example, initialize() and execute() each may fail. The ? that follows the method's name tells our program to exit and return an error on failure, otherwise continue on. Note that the .execute() method is asynchronous, so it must be followed with .await, and as it can return an error it also has a ?. The final line, Ok(()) returns the empty result expected on success.

And that's it, you've created your first load test! Read on to see how to run it and what it does.

Validating Requests

Goose Eggs

Goose-eggs are helpful in writing Goose load tests.

To leverage Goose Eggs when writing your load test, include the crate in the dependency section of your Cargo.toml:

[dependencies]

goose-eggs = "0.4"

For example, to use the Goose Eggs validation functions, bring the Validate structure and either the validate_page or the validate_and_load_static_assets function into scope:

use goose_eggs::{validate_and_load_static_assets, Validate};

Now, it is simple to verify that we received a 200 HTTP response status code, and that the text Gander appeared somewhere on the page as expected:

let goose = user.get("/goose/").await?;
let validate = &Validate::builder()
    .status(200)
    .text("Gander")
    .build();
validate_and_load_static_assets(user, goose, &validate).await?;

Whether or not validation passed or failed will be visible in the Goose metrics when the load test finishes. You can enable the debug log to gain more insight into failures.

Read the goose-eggs documentation to learn about other helpful functions useful in writing load tests, as well as other validation helpers, such as headers, header values, the page title, and whether the request was redirected.

Running A Load Test

We will use Cargo to run our example load test application. It's best to get in the habit of setting the --release option whenever compiling or running load tests.

$ cargo run --release

Finished release [optimized] target(s) in 0.06s

Running `target/release/loadtest`

07:08:43 [INFO] Output verbosity level: INFO

07:08:43 [INFO] Logfile verbosity level: WARN

07:08:43 [INFO] users defaulted to number of CPUs = 10

Error: InvalidOption { option: "--host", value: "", detail: "A host must be defined via the --host option, the GooseAttack.set_default() function, or the Scenario.set_host() function (no host defined for LoadtestTransactions)." }

The load test fails with an error as it hasn't been told the host you want to load test.

So, let's try again, this time passing in the --host flag. We will also add the --report-file flag with a .html file extension, which will generate an HTML report, and --no-reset-metrics to preserve all information including the load test startup. The same information will also be printed to the command line (without graphs). After running for a few seconds, press ctrl-c one time to gracefully stop the load test:

% cargo run --release -- --host http://umami.ddev.site --report-file=report.html --no-reset-metrics

Finished release [optimized] target(s) in 0.06s

Running `target/release/loadtest --host 'http://umami.ddev.site' --report-file=report.html --no-reset-metrics`

08:53:48 [INFO] Output verbosity level: INFO

08:53:48 [INFO] Logfile verbosity level: WARN

08:53:48 [INFO] users defaulted to number of CPUs = 10

08:53:48 [INFO] no_reset_metrics = true

08:53:48 [INFO] report_file = report.html

08:53:48 [INFO] global host configured: http://umami.ddev.site

08:53:48 [INFO] allocating transactions and scenarios with RoundRobin scheduler

08:53:48 [INFO] initializing 10 user states...

08:53:48 [INFO] Telnet controller listening on: 0.0.0.0:5116

08:53:48 [INFO] WebSocket controller listening on: 0.0.0.0:5117

08:53:48 [INFO] entering GooseAttack phase: Increase

08:53:48 [INFO] [user 1]: launching user from LoadtestTransactions

08:53:49 [INFO] [user 2]: launching user from LoadtestTransactions

08:53:50 [INFO] [user 3]: launching user from LoadtestTransactions

08:53:51 [INFO] [user 4]: launching user from LoadtestTransactions

08:53:52 [INFO] [user 5]: launching user from LoadtestTransactions

08:53:53 [INFO] [user 6]: launching user from LoadtestTransactions

08:53:54 [INFO] [user 7]: launching user from LoadtestTransactions

08:53:55 [INFO] [user 8]: launching user from LoadtestTransactions

08:53:56 [INFO] [user 9]: launching user from LoadtestTransactions

08:53:57 [INFO] [user 10]: launching user from LoadtestTransactions

All 10 users hatched.

08:53:58 [INFO] entering GooseAttack phase: Maintain

^C08:54:25 [WARN] caught ctrl-c, stopping...

As of Goose 0.16.0, by default all INFO and higher level log messages are displayed on the console while the load test runs. You can disable these messages with the -q (--quiet) flag. Or, you can display low-level debug with the -v (--verbose) flag.

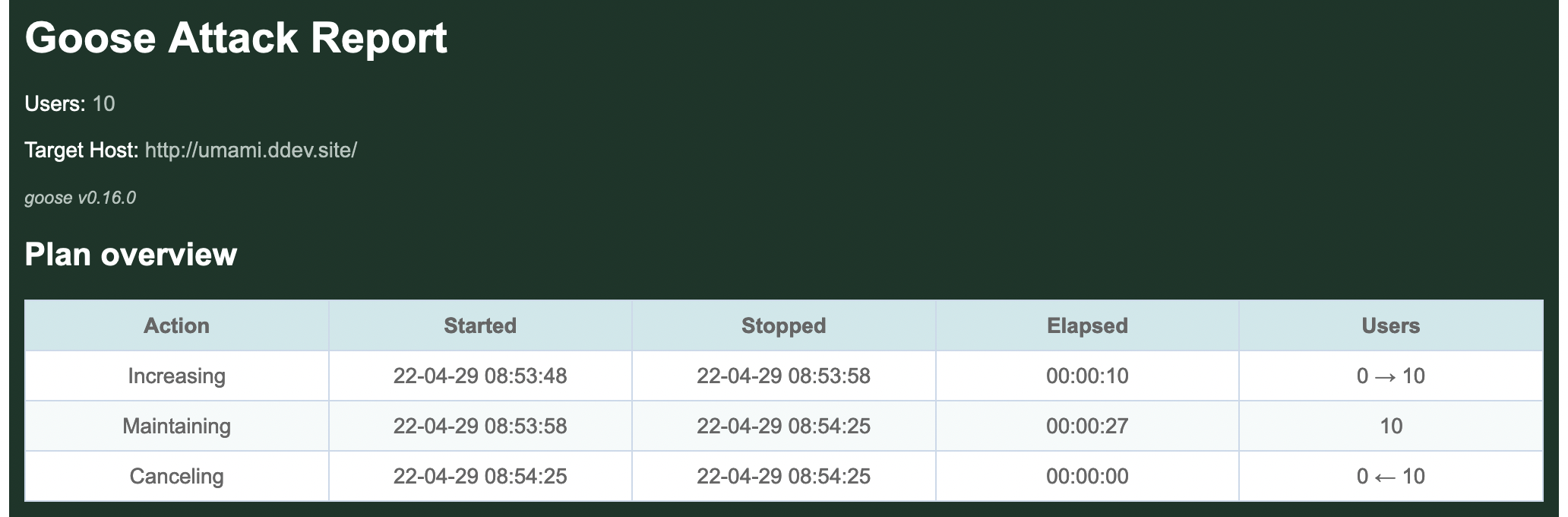

HTML report

When the load test finishes shutting down, it displays ASCII metrics on the CLI and creates an HTML report named report.html in the local directory, as configured above. The graphs and tables from the HTML report are shown below:

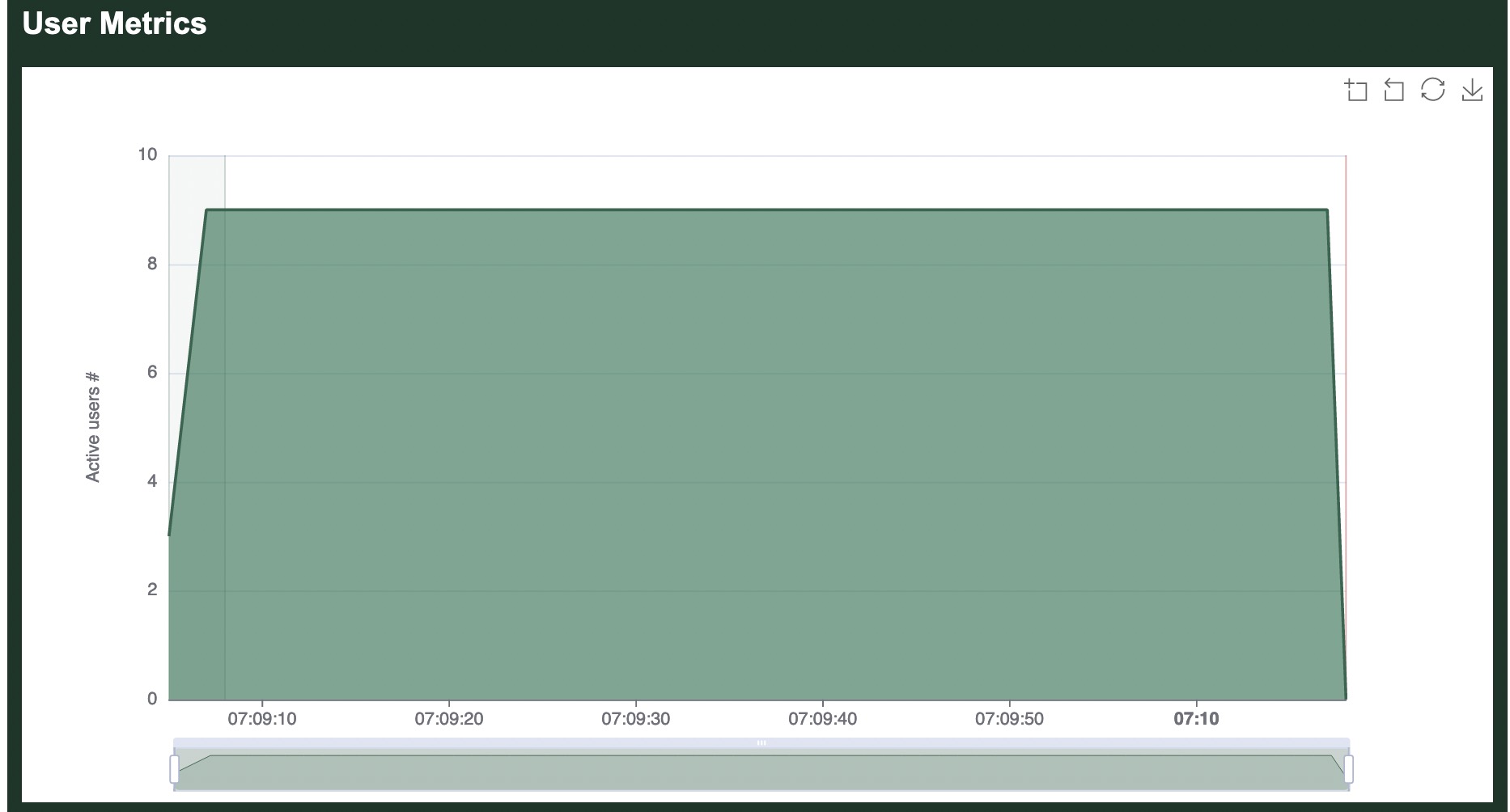

By default, Goose will hatch 1 GooseUser per second, up to the number of CPU cores available on the server used for load testing. In the above example, the loadtest was run from a laptop with 10 CPU cores, so it took 10 seconds to hatch all users.

By default, after all users are launched Goose will flush all metrics collected during the launching process (we used the --no-reset-metrics flag to disable this behavior) so the summary metrics are collected with all users running. If we'd not used --no-reset-metrics, before flushing the metrics they would have been displayed to the console so the data is not lost.

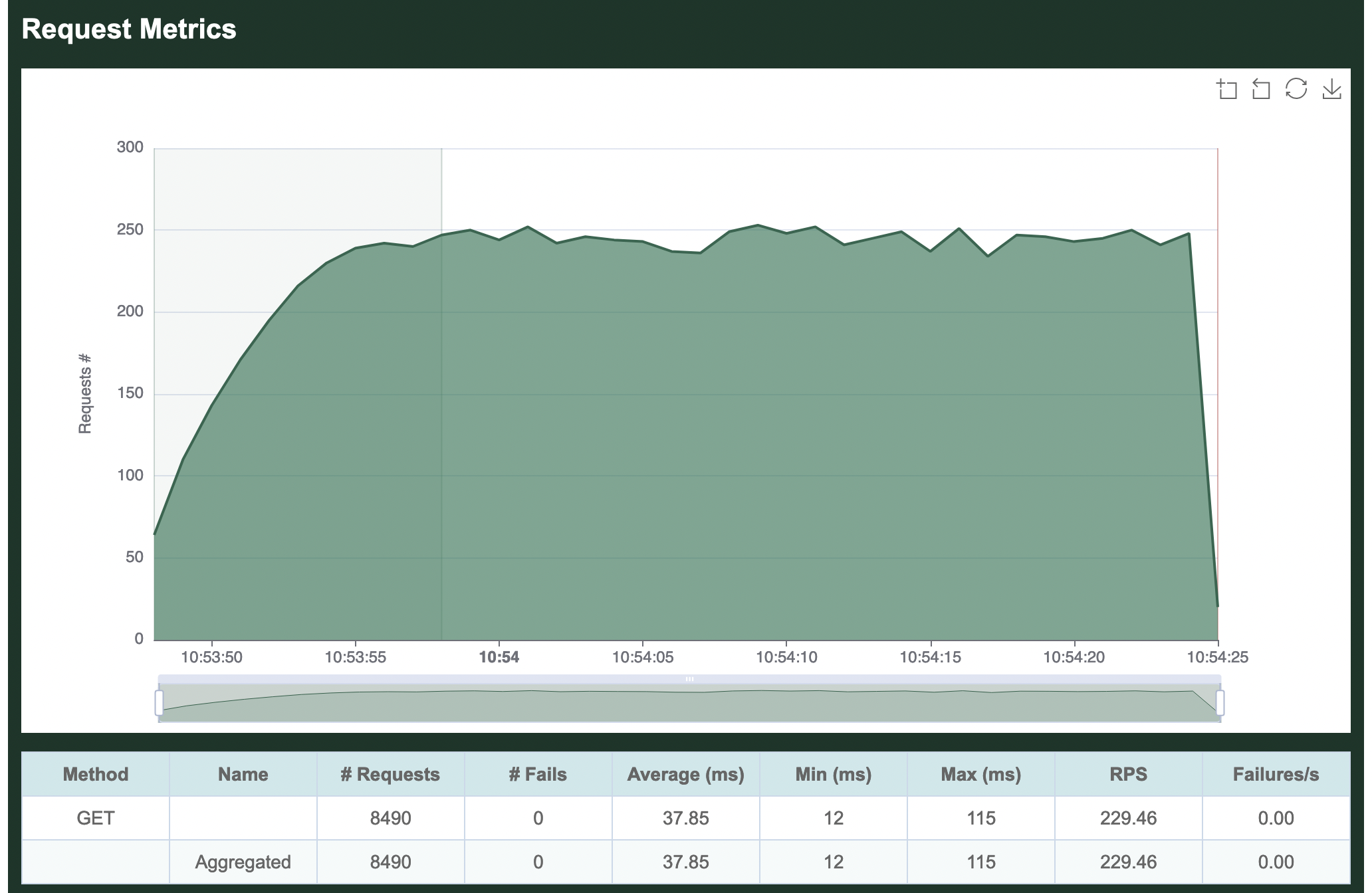

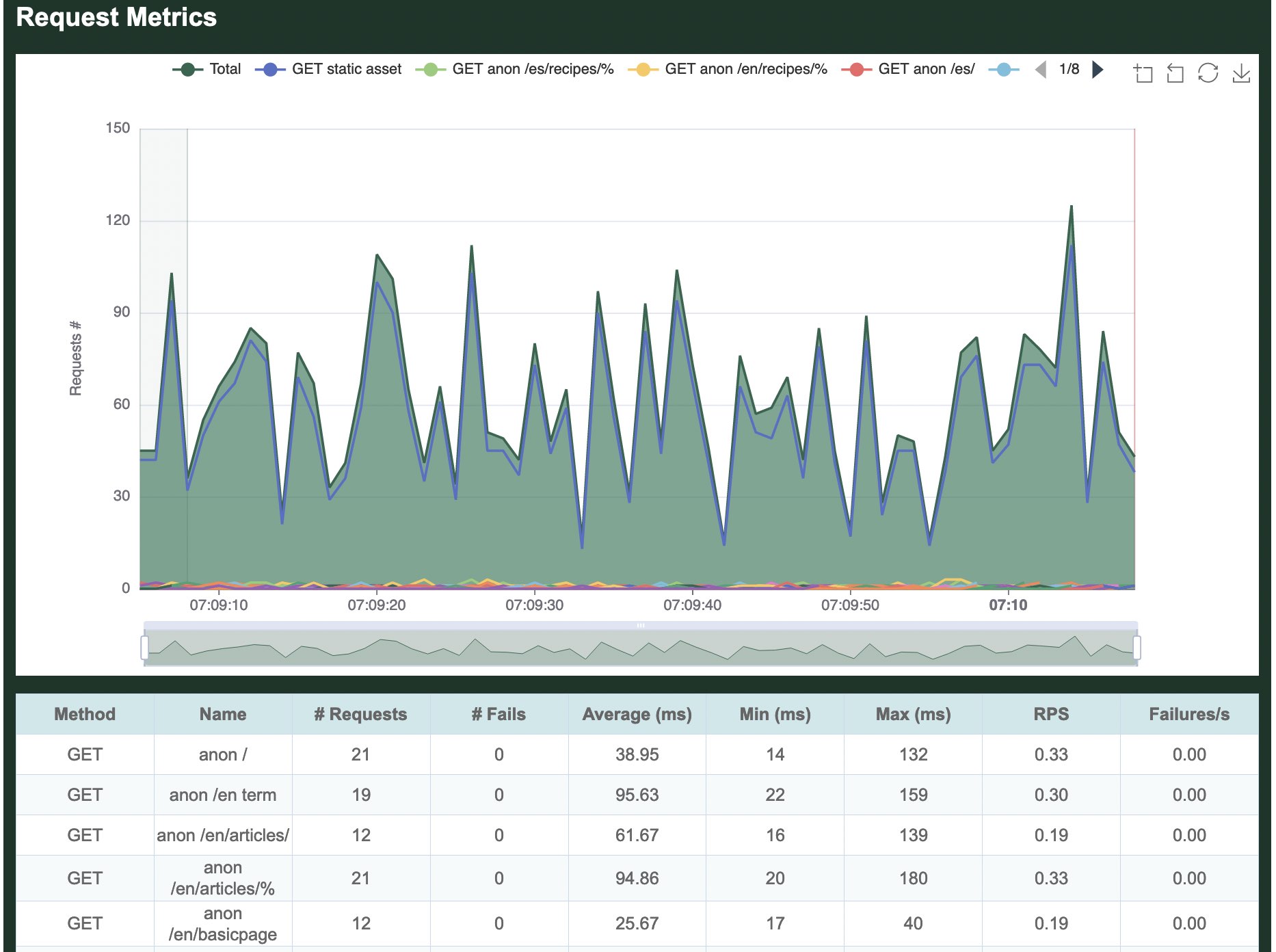

Request metrics

The per-request metrics are displayed first. Our single transaction makes a GET request for the empty "" path, so it shows up in the metrics as simply GET . The table in this section displays the total number of requests made (8,490), the average number of requests per second (229.46), and the average number of failed requests per second (0).

Additionally it shows the average time required to load a page (37.85 milliseconds), the minimum time to load a page (12 ms) and the maximum time to load a page (115 ms).

If our load test made multiple requests, the Aggregated line at the bottom of this section would show totals and averages of all requests together. Because we only make a single request, this row is identical to the per-request metrics.
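To make the columns of this table concrete, here is a minimal sketch of how such summary values can be derived from raw response times. The `RequestSummary` struct and `summarize` function are hypothetical names for illustration and are not part of Goose's API; Goose collects and aggregates these values itself.

```rust
/// Summary values similar to the columns of the request metrics table.
/// Hypothetical type for illustration; not Goose's own metrics structure.
struct RequestSummary {
    count: usize,
    average_ms: f64,
    min_ms: u64,
    max_ms: u64,
    per_second: f64,
}

/// Summarizes response times (in milliseconds) collected over `elapsed_secs`.
/// Returns None when there is nothing to summarize.
fn summarize(times_ms: &[u64], elapsed_secs: f64) -> Option<RequestSummary> {
    if times_ms.is_empty() || elapsed_secs <= 0.0 {
        return None;
    }
    let count = times_ms.len();
    let total_ms: u64 = times_ms.iter().sum();
    Some(RequestSummary {
        count,
        average_ms: total_ms as f64 / count as f64,
        min_ms: *times_ms.iter().min()?,
        max_ms: *times_ms.iter().max()?,
        per_second: count as f64 / elapsed_secs,
    })
}
```

For example, four requests taking 12, 38, 115 and 35 ms over two seconds give an average of 50 ms, a minimum of 12 ms, a maximum of 115 ms, and 2 requests per second.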

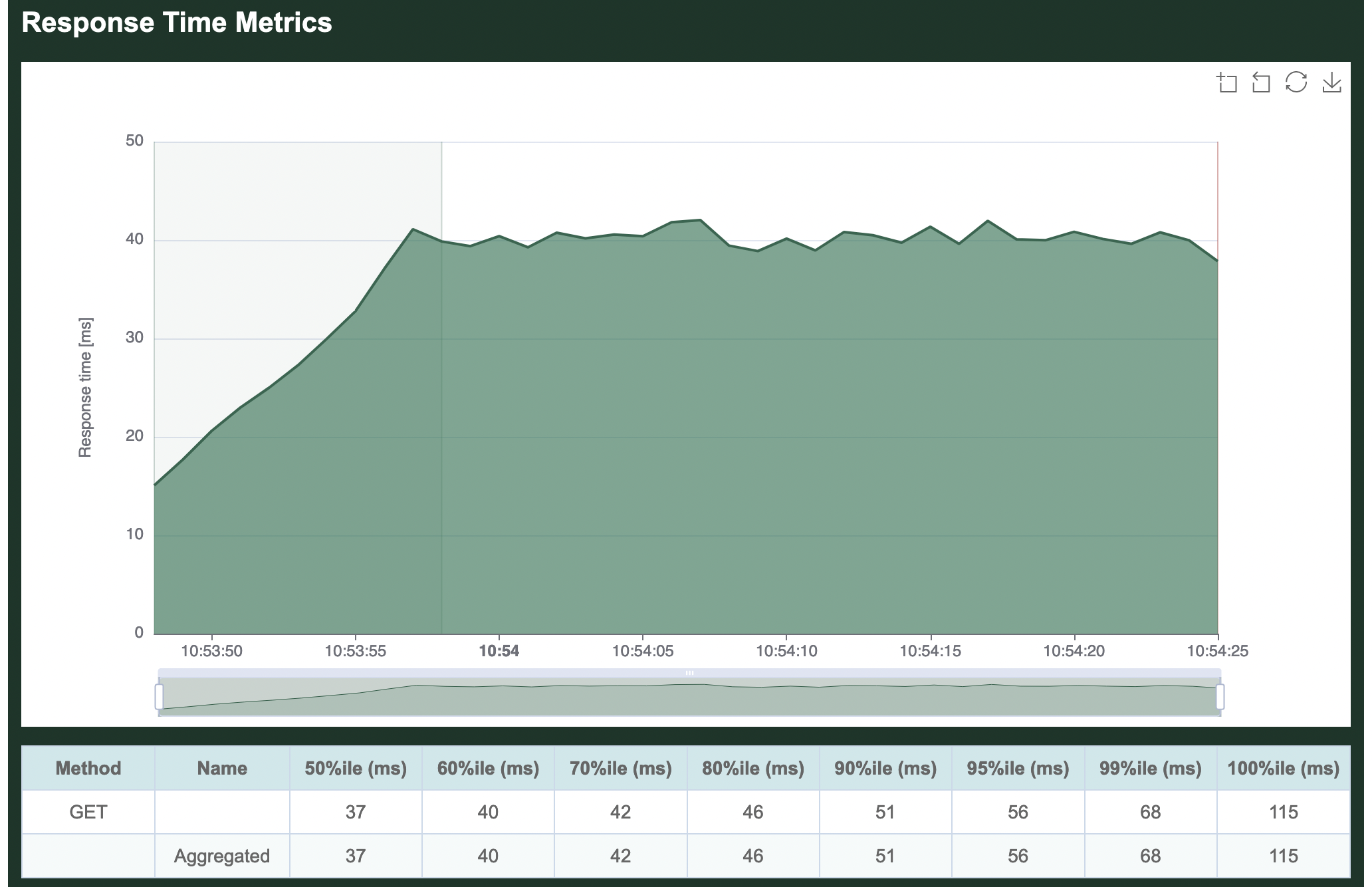

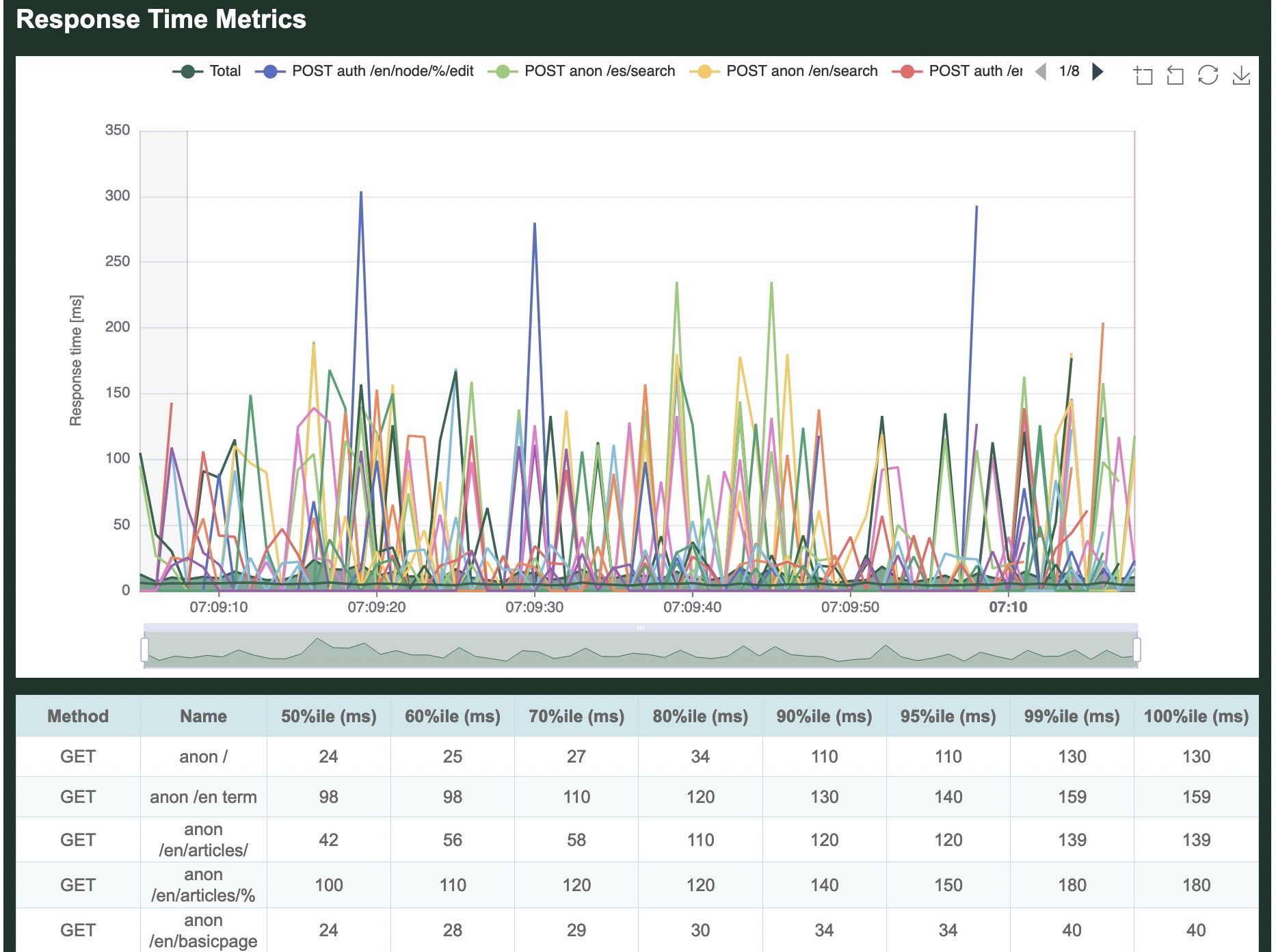

Response time metrics

The second section displays the average time required to load a page. The table in this section is showing the slowest page load time for a range of percentiles. In our example, in the 50% fastest page loads, the slowest page loaded in 37 ms. In the 70% fastest page loads, the slowest page loaded in 42 ms, etc. The graph, on the other hand, is displaying the average response time aggregated across all requests.
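The percentile rows can be read as "sort all response times, then take the slowest one within the fastest N%". A rough sketch of that idea follows, using the nearest-rank method. The function name `percentile` is hypothetical, and this is a simplification rather than the way Goose stores or computes its metrics.

```rust
/// Returns the slowest response time (ms) among the fastest `percent` of
/// requests, using the nearest-rank method. `percent` must be 1..=100.
fn percentile(times_ms: &[u64], percent: usize) -> Option<u64> {
    if times_ms.is_empty() || percent == 0 || percent > 100 {
        return None;
    }
    let mut sorted = times_ms.to_vec();
    sorted.sort_unstable();
    // Integer ceiling of percent * n / 100, which is always in 1..=n here.
    let rank = (percent * sorted.len() + 99) / 100;
    Some(sorted[rank - 1])
}
```

With ten made-up sample times of 12, 25, 31, 35, 37, 39, 42, 60, 88 and 115 ms, the 50% row would show 37 ms and the 70% row would show 42 ms.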

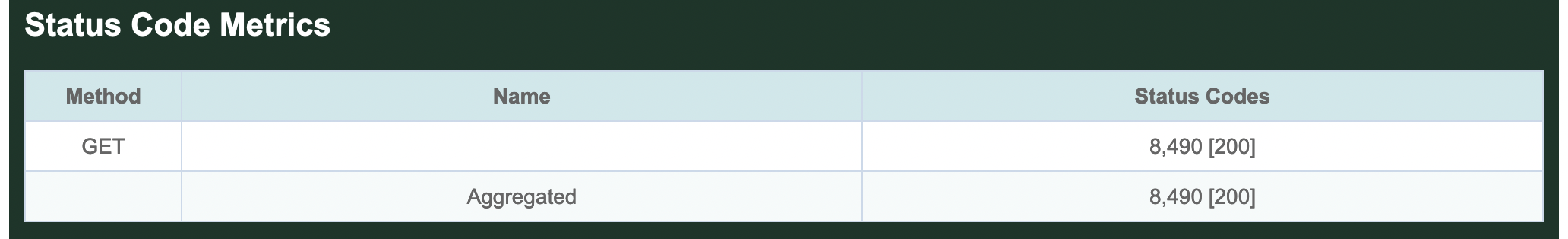

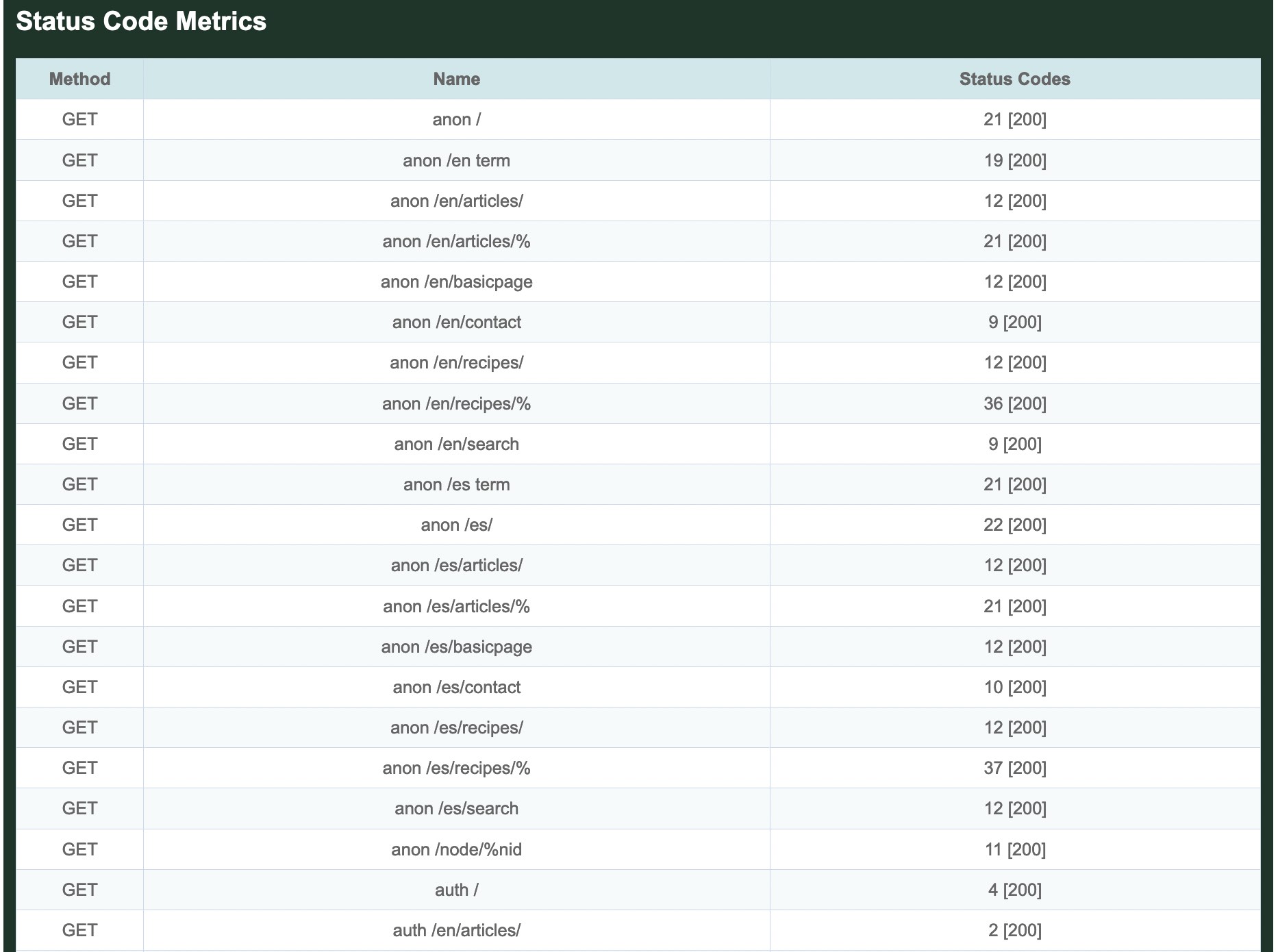

Status code metrics

The third section is a table showing all response codes received for each request. In this simple example, all 8,490 requests received a 200 OK response.

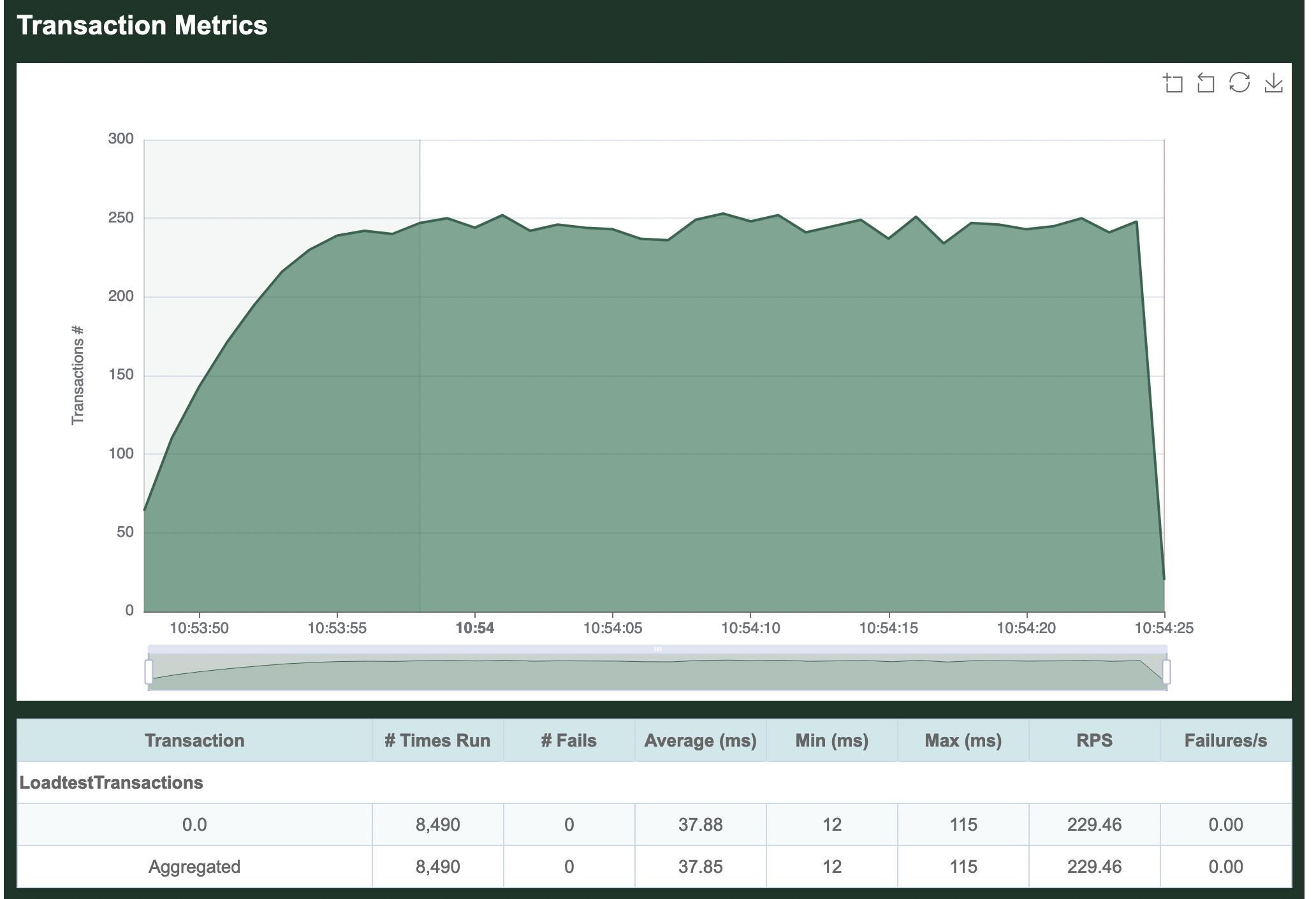

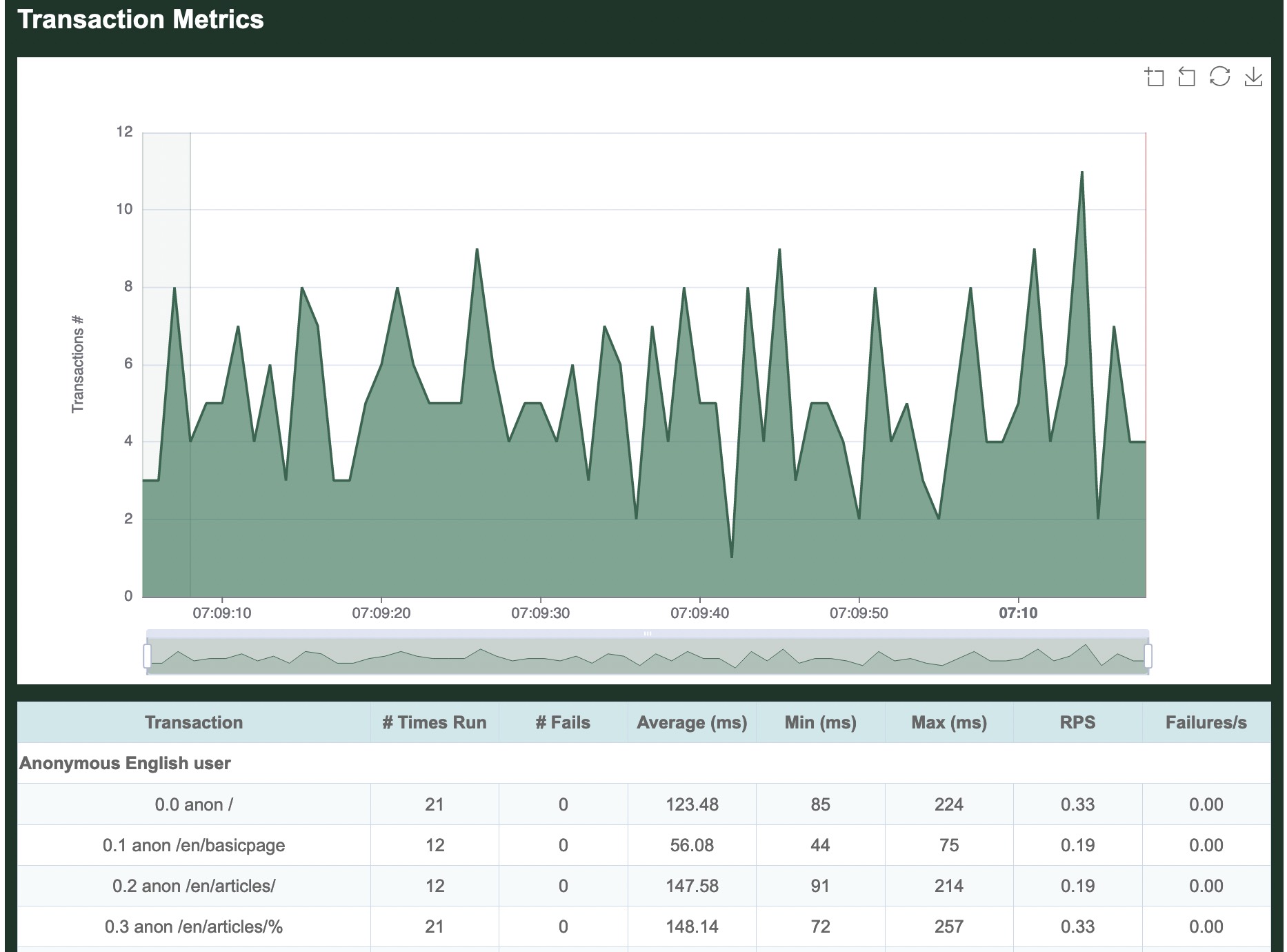

Transaction metrics

Next comes per-transaction metrics, starting with the name of our Scenario, LoadtestTransactions. Individual transactions in the Scenario are then listed in the order they are defined in our load test. We did not name our transaction, so it simply shows up as 0.0. All defined transactions will be listed here, even if they did not run, so this can be useful to confirm everything in your load test is running as expected. Comparing the transaction metrics collected for 0.0 to the per-request metrics collected for GET /, you can see that they are the same. This is because in our simple example, our single transaction only makes one request.

In real load tests, you'll most likely have multiple scenarios each with multiple transactions, and Goose will show you metrics for each along with an aggregate of them all together.

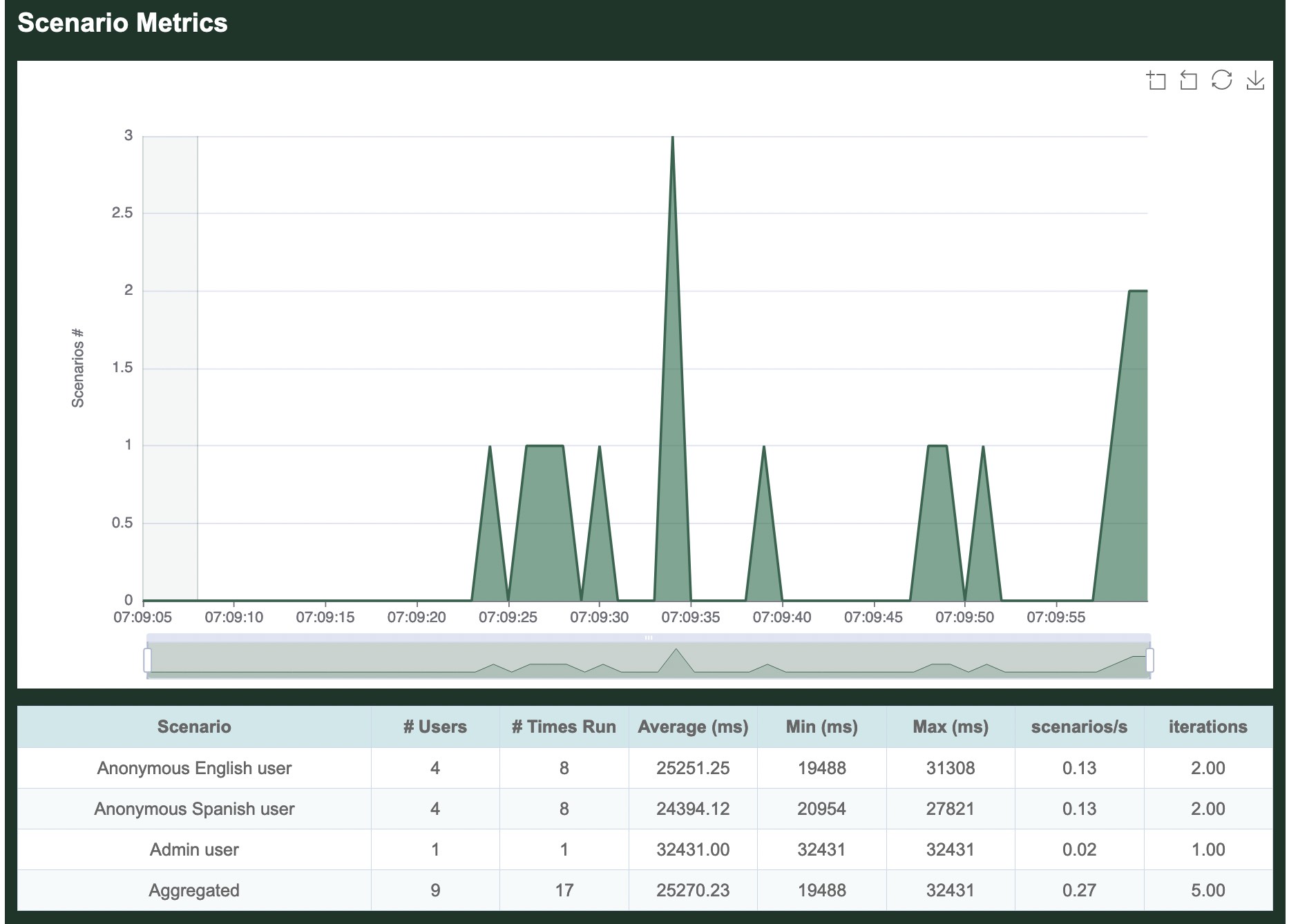

Scenario metrics

Per-scenario metrics follow the per-transaction metrics. This page has not yet been updated to include a proper example of Scenario metrics.

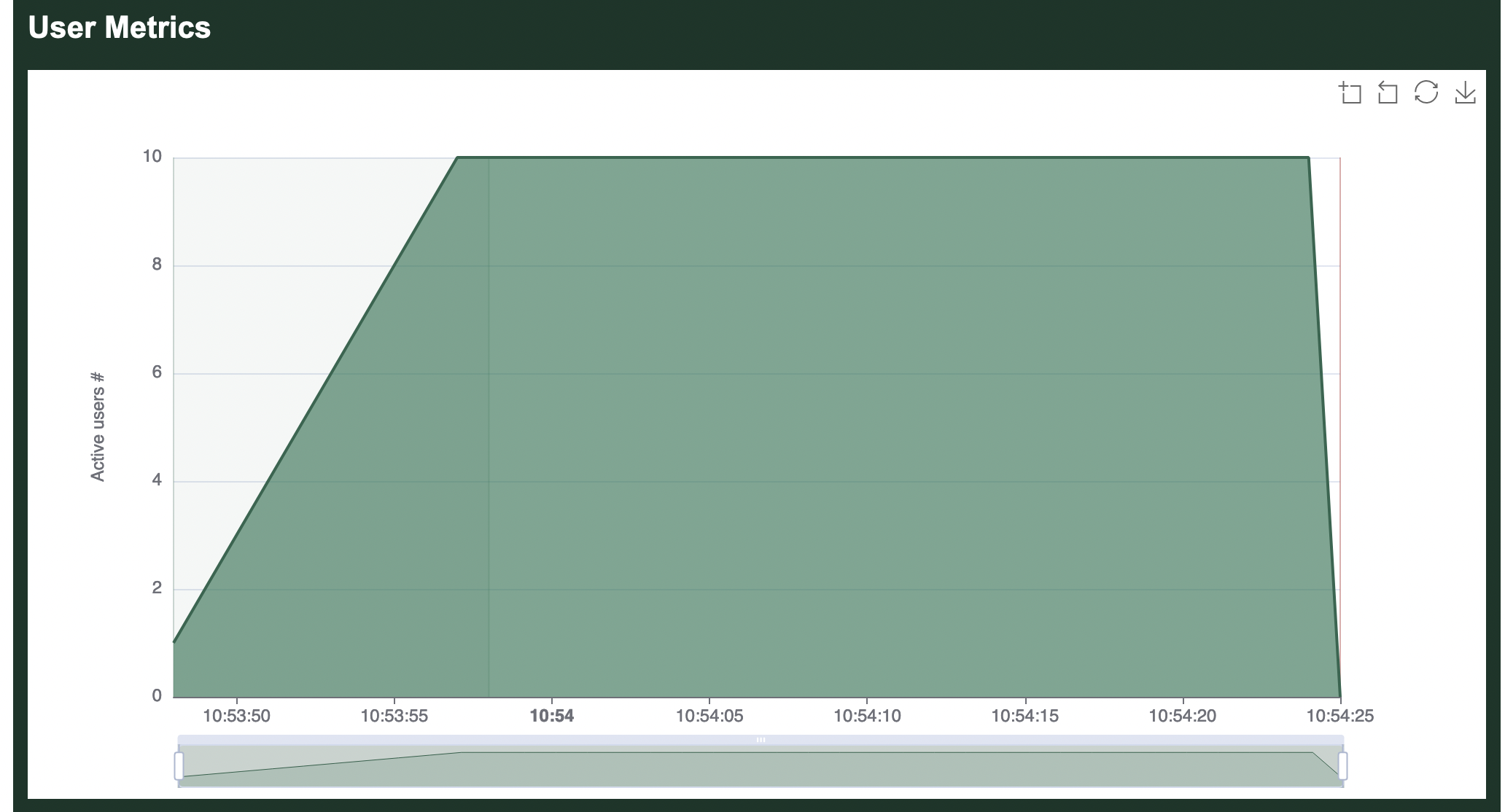

User metrics

Finally comes a chart showing how many users were running during the load test. You can clearly see the 10 users starting 1 per second at the start of the load test, as well as the final second when users quickly stopped.

Refer to the examples included with Goose for more complicated and useful load test examples.

Run-Time Options

The -h flag will show all run-time configuration options available to Goose load tests. For example, you can pass the -h flag to our example loadtest as follows, cargo run --release -- -h:

Usage: target/release/loadtest [OPTIONS]

Goose is a modern, high-performance, distributed HTTP(S) load testing tool,

written in Rust. Visit https://book.goose.rs/ for more information.

The following runtime options are available when launching a Goose load test:

Optional arguments:

-h, --help Displays this help

-V, --version Prints version information

-l, --list Lists all transactions and exits

-H, --host HOST Defines host to load test (ie http://10.21.32.33)

-u, --users USERS Sets concurrent users (default: number of CPUs)

-r, --hatch-rate RATE Sets per-second user hatch rate (default: 1)

-s, --startup-time TIME Starts users for up to (30s, 20m, 3h, 1h30m, etc)

-t, --run-time TIME Stops load test after (30s, 20m, 3h, 1h30m, etc)

-G, --goose-log NAME Enables Goose log file and sets name

-g, --log-level Increases Goose log level (-g, -gg, etc)

-q, --quiet Decreases Goose verbosity (-q, -qq, etc)

-v, --verbose Increases Goose verbosity (-v, -vv, etc)

Metrics:

--running-metrics TIME How often to optionally print running metrics

--no-reset-metrics Doesn't reset metrics after all users have started

--no-metrics Doesn't track metrics

--no-transaction-metrics Doesn't track transaction metrics

--no-scenario-metrics Doesn't track scenario metrics

--no-print-metrics Doesn't display metrics at end of load test

--no-error-summary Doesn't display an error summary

--report-file NAME Create reports, can be used multiple times (supports .html, .htm, .md, .json)

--no-granular-report Disable granular graphs in report file

-R, --request-log NAME Sets request log file name

--request-format FORMAT Sets request log format (csv, json, raw, pretty)

--request-body Include the request body in the request log

-T, --transaction-log NAME Sets transaction log file name

--transaction-format FORMAT Sets log format (csv, json, raw, pretty)

-S, --scenario-log NAME Sets scenario log file name

--scenario-format FORMAT Sets log format (csv, json, raw, pretty)

-E, --error-log NAME Sets error log file name

--error-format FORMAT Sets error log format (csv, json, raw, pretty)

-D, --debug-log NAME Sets debug log file name

--debug-format FORMAT Sets debug log format (csv, json, raw, pretty)

--no-debug-body Do not include the response body in the debug log

--no-status-codes Do not track status code metrics

Advanced:

--test-plan "TESTPLAN" Defines a more complex test plan ("10,60s;0,30s")

--iterations ITERATIONS Sets how many times to run scenarios then exit

--scenarios "SCENARIO" Limits load test to only specified scenarios

--scenarios-list Lists all scenarios and exits

--no-telnet Doesn't enable telnet Controller

--telnet-host HOST Sets telnet Controller host (default: 0.0.0.0)

--telnet-port PORT Sets telnet Controller TCP port (default: 5116)

--no-websocket Doesn't enable WebSocket Controller

--websocket-host HOST Sets WebSocket Controller host (default: 0.0.0.0)

--websocket-port PORT Sets WebSocket Controller TCP port (default: 5117)

--no-autostart Doesn't automatically start load test

--no-gzip Doesn't set the gzip Accept-Encoding header

--timeout VALUE Sets per-request timeout, in seconds (default: 60)

--co-mitigation STRATEGY Sets coordinated omission mitigation strategy

--throttle-requests VALUE Sets maximum requests per second

--sticky-follow Follows base_url redirect with subsequent requests

--accept-invalid-certs Disables validation of https certificates

All of the above configuration options are defined in the developer documentation.

Common Run Time Options

As seen on the previous page, Goose has a lot of run time options which can be overwhelming. The following are a few of the more common and more important options to be familiar with. In these examples we only demonstrate one option at a time, but it's generally useful to combine many options.

Host to load test

Load test plans typically contain relative paths, and so Goose must be told which host to run the load test against in order for it to start. This allows a single load test plan to be used for testing different environments, for example "http://local.example.com", "https://qa.example.com", and "https://www.example.com".

Host example

Load test the https://www.example.com domain.

cargo run --release -- -H https://www.example.com

How many users to simulate

By default, Goose will launch one user per available CPU core. Often you will want to simulate considerably more users than this, and this can be done by setting the --users run time option.

(Alternatively, you can use --test-plan to build both simple and more complex traffic patterns that can include a varying number of users.)

Users example

Launch 1,000 GooseUsers.

cargo run --release -- -u 1000

Controlling how long it takes Goose to launch all users

There are several ways to configure how long Goose will take to launch all configured GooseUsers. For starters, you can use either --hatch-rate or --startup-time, but not both together. Alternatively, you can use --test-plan to build both simple and more complex traffic patterns that can include varying launch rates.

Specifying the hatch rate

By default, Goose starts one GooseUser per second. So if you configure --users to 10 it will take ten seconds to fully start the load test. If you set --hatch-rate 5 then Goose will start 5 users every second, taking two seconds to start up. If you set --hatch-rate 0.5 then Goose will start 1 user every 2 seconds, taking twenty seconds to start all 10 users.

(The configured hatch rate is a best effort limit: Goose will not start users faster than this, but there is no guarantee that your load test server is capable of starting users as fast as you configure.)
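The hatch rate arithmetic above is simply users divided by rate. A small sketch, with a hypothetical helper name `launch_seconds` that is not part of Goose:

```rust
/// Seconds needed to launch `users` at `hatch_rate` users per second.
/// Returns None for a zero or negative rate. Illustrative arithmetic only.
fn launch_seconds(users: usize, hatch_rate: f64) -> Option<f64> {
    if hatch_rate <= 0.0 {
        return None;
    }
    Some(users as f64 / hatch_rate)
}
```

For 10 users this gives 10 seconds at a rate of 1, 2 seconds at a rate of 5, and 20 seconds at a rate of 0.5, matching the figures above.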

Hatch rate example

Launch one user every two seconds.

cargo run --release -- -r .5

Specifying the total startup time

Alternatively, you can tell Goose how long you'd like it to take to start all GooseUsers. So, if you configure --users to 10 and set --startup-time 10 it will launch 1 user every second. If you set --startup-time 1m it will start 1 user every 6 seconds, starting all users over one minute. And if you set --startup-time 2s it will launch five users per second, launching all users in two seconds.

(The configured startup time is a best effort limit: Goose will not start users faster than this, but there is no guarantee that your load test server is capable of starting users as fast as you configure.)
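Going the other way, a startup time implies a delay between user launches. The sketch below assumes users are spread evenly over the startup time; `launch_interval` is a hypothetical helper, not a Goose function.

```rust
use std::time::Duration;

/// Delay between launches when `users` are spread evenly over `startup`.
/// Returns None when there are no users to launch. Illustrative only.
fn launch_interval(users: u32, startup: Duration) -> Option<Duration> {
    if users == 0 {
        return None;
    }
    Some(startup / users)
}
```

For 10 users, a startup time of 10 seconds gives one launch per second, 1 minute gives one launch every 6 seconds, and 2 seconds gives one launch every 200 milliseconds (five per second).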

Startup time example

Launch all users in 5 seconds.

cargo run --release -- -s 5

Specifying how long the load test will run

The --run-time option is not affected by how long Goose takes to start up. Thus, if you configure a load test with --users 100 --startup-time 30m --run-time 5m Goose will run for a total of 35 minutes, first ramping up for 30 minutes and then running at full load for 5 minutes. If you want Goose to exit immediately after all users start, you can set a very small run time, for example --users 100 --hatch-rate .25 --run-time 1s.

Alternatively, you can use --test-plan to build both simple and more complex traffic patterns and can define how long the load test runs.

A final option is to instead use the --iterations option to configure how many times GooseUsers will run through their assigned Scenario before exiting.

If you do not configure a run time, Goose will run until it's canceled with ctrl-c.

Run time example

Run the load test for 30 minutes.

cargo run --release -- -t 30m

Iterations example

Each GooseUser runs its assigned Scenario 5 times, however long that takes, and then stops.

cargo run --release -- --iterations 5

Writing An HTML-formatted Report

By default, Goose displays text-formatted metrics when a load test finishes.

It can also optionally write one or more reports in HTML, Markdown, or JSON format. For that, you need to provide one or more --report-file <FILE> run-time options. All requested reports will be written.

The value of <FILE> is an absolute or relative path to the report file to generate. The file extension determines the type of report to write. Any file that already exists at the specified path will be overwritten.

For more information, see Metrics Reports.
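As a rough illustration of choosing a report type from the file extension, the sketch below maps the extensions listed in the run-time help (.html, .htm, .md, .json) to a report kind. The `ReportKind` enum and `report_kind` function are hypothetical, and treating extensions case-insensitively is an assumption of this sketch; Goose's own handling may differ.

```rust
use std::path::Path;

/// Kinds of report named in the run-time help. Hypothetical type.
#[derive(Debug, PartialEq)]
enum ReportKind {
    Html,
    Markdown,
    Json,
}

/// Picks a report kind from the file extension, or None if unsupported.
/// Lowercasing the extension is an assumption made for this sketch.
fn report_kind(file: &str) -> Option<ReportKind> {
    let extension = Path::new(file).extension()?.to_str()?.to_ascii_lowercase();
    match extension.as_str() {
        "html" | "htm" => Some(ReportKind::Html),
        "md" => Some(ReportKind::Markdown),
        "json" => Some(ReportKind::Json),
        _ => None,
    }
}
```

Here `report_kind("report.html")` yields the HTML kind and `report_kind("reports/run.md")` yields the Markdown kind, while a path without a supported extension yields None.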

HTML report example

Write an HTML-formatted report to report.html when the load test finishes.

cargo run --release -- --report-file report.html

HTML & Markdown report example

Write a Markdown and an HTML-formatted report when the load test finishes.

cargo run --release -- --report-file report.md --report-file report.html

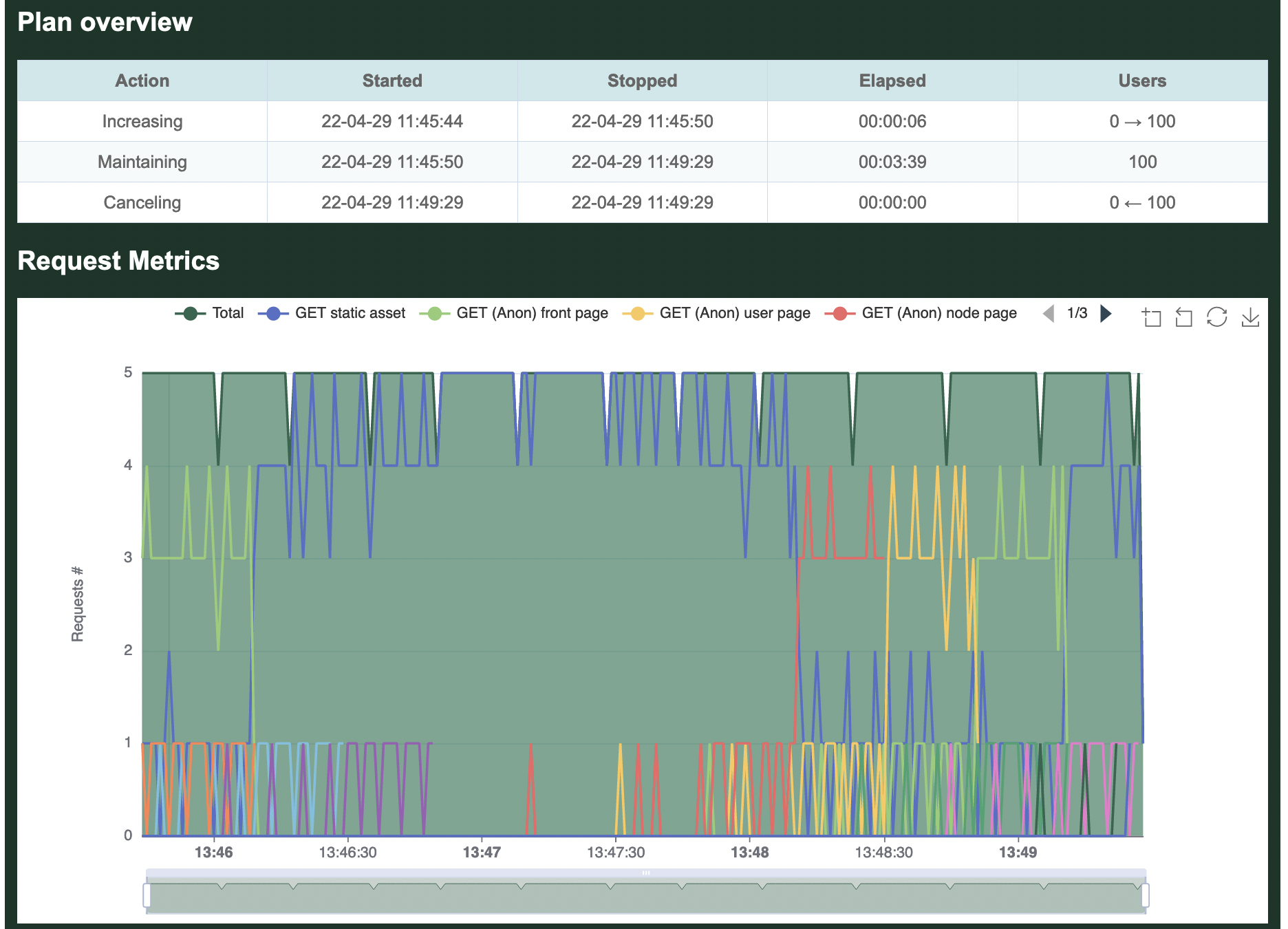

Test Plan

A load test that ramps up to full strength and then runs for a set amount of time can be configured by combining the --startup-time or --hatch-rate options together with the --users and --run-time options. For more complex load patterns you must instead use the --test-plan option.

A test plan is defined as a series of numerical pairs that each defines a number of users, and the amount of time to ramp to this number of users. For example, 10,60s means "launch 10 users over 60 seconds". By stringing together multiple pairs separated by a semicolon you can define more complex test plans. For example, 10,1m;10,5m;0,0s means "launch 10 users over 1 minute, continue with 10 users for 5 minutes, then shut down the load test as quickly as possible".

The amount of time can be defined in seconds (e.g. 10,5s), minutes (e.g. 10,15m) or hours (e.g. 10,1h). The "s/m/h" notation is optional and seconds will be assumed if omitted. However, the explicit notation is recommended, since Goose will be able to detect any mistakes if used.
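The test plan format can be illustrated with a small parser. This is a simplified sketch, not Goose's parser: the function names are hypothetical, and it only accepts a single optional unit suffix (s, m or h) per duration. It produces (users, milliseconds) pairs.

```rust
/// Parses one duration such as "45", "45s", "15m" or "1h" into milliseconds.
/// Simplified: only a single optional unit suffix is supported.
fn parse_duration_ms(text: &str) -> Option<usize> {
    let text = text.trim();
    let (digits, multiplier) = match text.chars().last()? {
        's' => (&text[..text.len() - 1], 1_000),
        'm' => (&text[..text.len() - 1], 60_000),
        'h' => (&text[..text.len() - 1], 3_600_000),
        // No suffix: seconds are assumed.
        _ => (text, 1_000),
    };
    digits.parse::<usize>().ok()?.checked_mul(multiplier)
}

/// Parses "users,duration;users,duration" into (users, milliseconds) pairs.
/// Returns None if any step is malformed.
fn parse_test_plan(plan: &str) -> Option<Vec<(usize, usize)>> {
    plan.split(';')
        .map(|step| {
            let (users, duration) = step.split_once(',')?;
            let users = users.trim().parse::<usize>().ok()?;
            Some((users, parse_duration_ms(duration)?))
        })
        .collect()
}
```

Under these assumptions, "10,1m;10,5m;0,0s" becomes the three steps (10, 60000), (10, 300000) and (0, 0).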

Simple Example

The following command tells Goose to start 10 users over 60 seconds and then to run for 5 minutes before shutting down:

$ cargo run --release -- -H http://local.dev/ --startup-time 1m --users 10 --run-time 5m --no-reset-metrics

The exact same behaviour can be defined with the following test plan:

$ cargo run --release -- -H http://local.dev/ --test-plan "10,1m;10,5m;0,0s"

Ramp Down Example

Goose will stop a load test as quickly as it can when the specified --run-time completes. To instead configure a load test to ramp down slowly you can use a test plan. In the following example, Goose starts 1000 users in 2 minutes and then slowly stops them over 500 seconds (stopping 2 users per second):

$ cargo run --release -- -H http://local.dev/ --test-plan "1000,2m;0,500s"

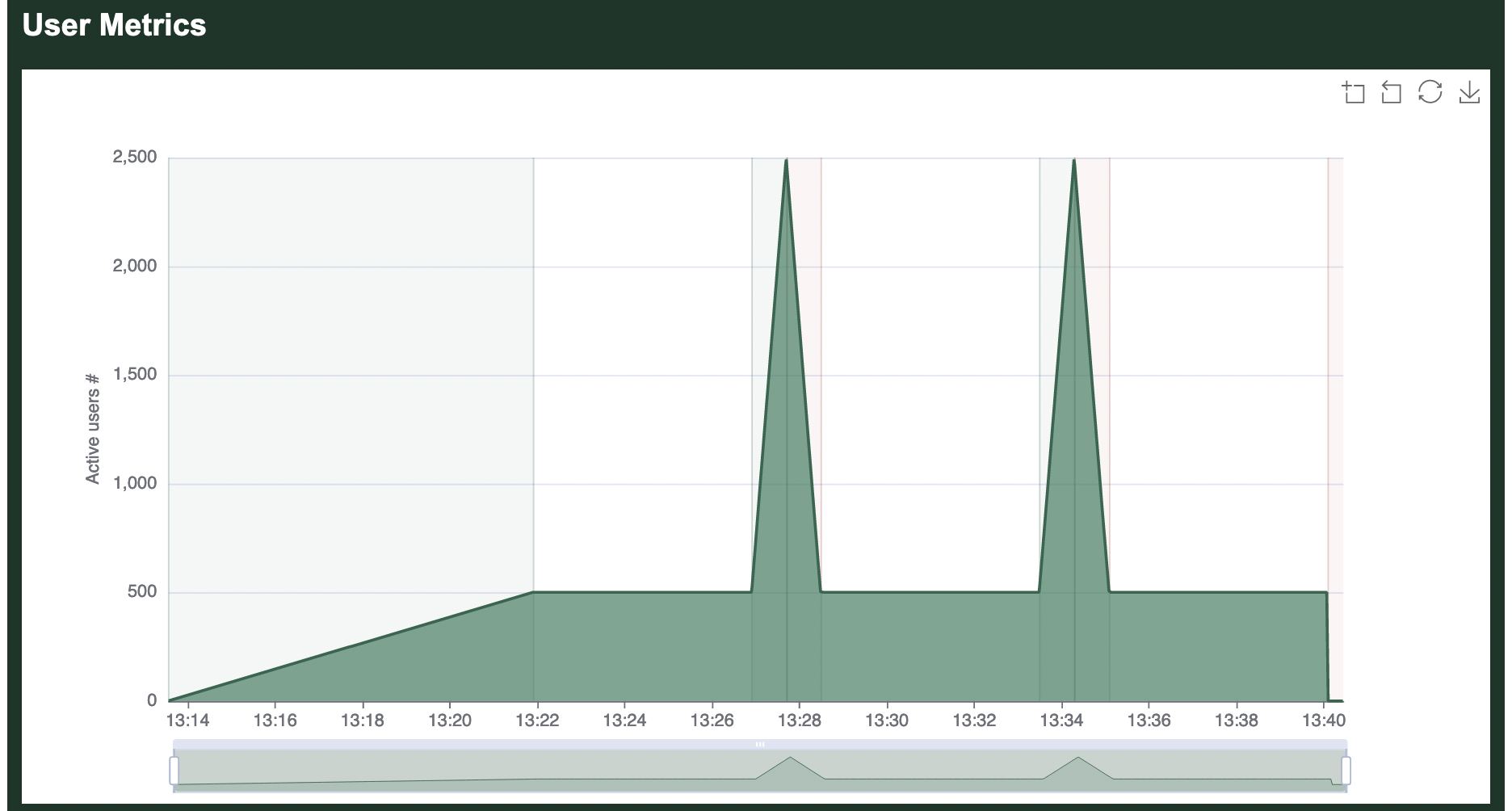

Load Spike Example

Another possibility when specifying a test plan is to add load spikes into otherwise steady load. In the following example, Goose starts 500 users over 5 minutes and then maintains that load, interrupted by two traffic spikes to 2,500 users:

$ cargo run --release -- -H http://local.dev/ --test-plan "500,5m;500,5m;2500,45s;500,45s;500,5m;2500,45s;500,45s;500,5m;0,0s"

Internals

Internally, Goose converts the test plan into a vector of usize tuples, Vec<(usize, usize)>, where the first integer reflects the number of users to be running and the second integer reflects the time in milliseconds. You can see the internal representation when you start a load test, for example:

% cargo run --release --example simple -- --no-autostart --test-plan "100,30s;100,1h" | grep test_plan

13:54:35 [INFO] test_plan = GooseTestPlan { test_plan: [(100, 30000), (100, 3600000)] }
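To make the conversion concrete, here is a minimal sketch of how a test plan string could be turned into the (users, milliseconds) pairs shown above. The function name parse_test_plan is hypothetical and this is not Goose's own parser: it assumes each time value carries at most one unit suffix and, as described earlier, treats a missing suffix as seconds.

```rust
/// Hypothetical helper, not part of Goose: parse a test plan such as
/// "10,1m;10,5m;0,0s" into (users, milliseconds) pairs.
/// Simplified: each time value has at most one unit suffix (s, m or h).
fn parse_test_plan(plan: &str) -> Result<Vec<(usize, usize)>, String> {
    plan.split(';')
        .map(|step| -> Result<(usize, usize), String> {
            let (users_str, time_str) = step
                .split_once(',')
                .ok_or_else(|| format!("expected `users,time` but found `{step}`"))?;
            let users = users_str
                .trim()
                .parse::<usize>()
                .map_err(|e| format!("invalid user count `{users_str}`: {e}"))?;
            let time = time_str.trim();
            // Split the unit from the digits; no suffix means seconds.
            let (digits, multiplier) = if let Some(d) = time.strip_suffix('h') {
                (d, 3_600_000)
            } else if let Some(d) = time.strip_suffix('m') {
                (d, 60_000)
            } else if let Some(d) = time.strip_suffix('s') {
                (d, 1_000)
            } else {
                (time, 1_000)
            };
            let amount = digits
                .parse::<usize>()
                .map_err(|e| format!("invalid time `{time}`: {e}"))?;
            Ok((users, amount * multiplier))
        })
        .collect()
}

fn main() {
    let plan = parse_test_plan("100,30s;100,1h").expect("valid test plan");
    println!("{plan:?}");
}
```

For the plan used in the command above, the sketch produces the same pairs that Goose logs: (100, 30000) and (100, 3600000).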

Throttling Requests

By default, Goose will generate as much load as it can. If this is not desirable, the throttle allows optionally limiting the maximum number of requests per second made during a load test. This can be helpful to ensure consistency when running a load test from multiple different servers with different available resources.

The throttle is specified as an integer and imposes a maximum number of requests, not a minimum number of requests.

Example

In this example, Goose will launch 100 GooseUser threads, but the throttle will prevent them from generating a combined total of more than 5 requests per second.

$ cargo run --release -- -H http://local.dev/ -u100 -r20 --throttle-requests 5
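The idea of a maximum rather than a minimum can be illustrated with a small sketch. The Throttle type below is hypothetical and is not how Goose implements its throttle; it assumes a simple fixed one-second window and takes the elapsed time as a parameter so the behavior is deterministic.

```rust
use std::time::Duration;

/// Hypothetical fixed-window limiter, for illustration only: allows at most
/// `max_per_second` requests in each one-second window.
struct Throttle {
    max_per_second: u32,
    window: u64, // index of the current one-second window
    used: u32,   // requests already allowed in that window
}

impl Throttle {
    fn new(max_per_second: u32) -> Self {
        Throttle { max_per_second, window: 0, used: 0 }
    }

    /// Returns true if a request may be sent `elapsed` after the test began.
    fn try_acquire(&mut self, elapsed: Duration) -> bool {
        let window = elapsed.as_secs();
        if window != self.window {
            // A new second has started: reset the budget.
            self.window = window;
            self.used = 0;
        }
        if self.used < self.max_per_second {
            self.used += 1;
            true
        } else {
            false
        }
    }
}

fn main() {
    let mut throttle = Throttle::new(5);
    // 100 users each attempt one request during the first second.
    let allowed = (0..100u64)
        .filter(|&i| throttle.try_acquire(Duration::from_millis(i * 10)))
        .count();
    println!("allowed in first second: {allowed}");
}
```

With a limit of 5, only 5 of the 100 attempts in the first second are allowed. If the users attempt fewer than 5 requests in a second, nothing forces the rate up to 5.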

Limiting Which Scenarios Run

It can often be useful to run only a subset of the Scenarios defined by a load test. Instead of commenting them out in the source code and recompiling, the --scenarios run-time option allows you to dynamically control which Scenarios are running.

Listing Scenarios By Machine Name

To ensure that each scenario has a unique name, you must use the machine name of the scenario when filtering which are running. For example, run the Umami example with the --scenarios-list flag to list the machine names:

% cargo run --release --example umami -- --scenarios-list

Finished release [optimized] target(s) in 0.15s

Running `target/release/examples/umami --scenarios-list`

05:24:03 [INFO] Output verbosity level: INFO

05:24:03 [INFO] Logfile verbosity level: WARN

05:24:03 [INFO] users defaulted to number of CPUs = 10

05:24:03 [INFO] iterations = 0

Scenarios:

- adminuser: ("Admin user")

- anonymousenglishuser: ("Anonymous English user")

- anonymousspanishuser: ("Anonymous Spanish user")

What Is A Machine Name: It is possible to name your Scenarios pretty much anything you want in your load test, including even using the same identical name for multiple Scenarios. A machine name ensures that you can still identify each Scenario uniquely, and without any special characters that can be difficult or insecure to pass through the command line. A machine name is made up of only the alphanumeric characters found in your Scenario's full name, and optionally with a number appended to differentiate between multiple Scenarios that would otherwise have the same name.

In the following example, we have three very similarly named Scenarios. One simply has an extra white space between words. The second has an airplane emoticon in the name. Both the extra space and the airplane symbol are stripped away from the machine name as they are not alphanumerics, and instead _1 and _2 are appended to the end to differentiate:

Scenarios:

- loadtesttransactions: ("LoadtestTransactions")

- loadtesttransactions_1: ("Loadtest Transactions")

- loadtesttransactions_2: ("LoadtestTransactions ✈️")
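The naming rule described above can be sketched in a few lines of Rust. The machine_names function is hypothetical and only approximates the behavior described here (keep alphanumeric characters, lowercase them, append a numeric suffix to duplicates); Goose's own implementation may differ in details.

```rust
use std::collections::HashMap;

/// Hypothetical helper, not part of Goose: derive a machine name for each
/// Scenario name, in registration order.
fn machine_names(names: &[&str]) -> Vec<String> {
    // How many times each base name has been seen so far.
    let mut seen: HashMap<String, usize> = HashMap::new();
    names
        .iter()
        .map(|name| {
            // Keep only alphanumeric characters, then lowercase.
            let base = name
                .chars()
                .filter(|c| c.is_alphanumeric())
                .collect::<String>()
                .to_lowercase();
            let count = seen.entry(base.clone()).or_insert(0);
            let machine_name = if *count == 0 {
                base
            } else {
                format!("{base}_{count}")
            };
            *count += 1;
            machine_name
        })
        .collect()
}

fn main() {
    let names = [
        "LoadtestTransactions",
        "Loadtest Transactions",
        "LoadtestTransactions \u{2708}", // airplane symbol
    ];
    for (machine, full) in machine_names(&names).iter().zip(names) {
        println!("- {machine}: (\"{full}\")");
    }
}
```

The three names collapse to the same base, so the second and third receive the _1 and _2 suffixes, matching the listing above.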

Running Scenarios By Machine Name

It is now possible to run any subset of the above scenarios by passing a comma-separated list of machine names with the --scenarios run-time option. Goose will match what you have typed against any machine name containing all or some of the typed text, so you do not have to type the full name. For example, to run only the two anonymous Scenarios, you could add --scenarios anon:

% cargo run --release --example umami -- --hatch-rate 10 --scenarios anon

Finished release [optimized] target(s) in 0.15s

Running `target/release/examples/umami --hatch-rate 10 --scenarios anon`

05:50:17 [INFO] Output verbosity level: INFO

05:50:17 [INFO] Logfile verbosity level: WARN

05:50:17 [INFO] users defaulted to number of CPUs = 10

05:50:17 [INFO] hatch_rate = 10

05:50:17 [INFO] iterations = 0

05:50:17 [INFO] scenarios = Scenarios { active: ["anon"] }

05:50:17 [INFO] host for Anonymous English user configured: https://drupal-9.ddev.site/

05:50:17 [INFO] host for Anonymous Spanish user configured: https://drupal-9.ddev.site/

05:50:17 [INFO] host for Admin user configured: https://drupal-9.ddev.site/

05:50:17 [INFO] allocating transactions and scenarios with RoundRobin scheduler

05:50:17 [INFO] initializing 10 user states...

05:50:17 [INFO] WebSocket controller listening on: 0.0.0.0:5117

05:50:17 [INFO] Telnet controller listening on: 0.0.0.0:5116

05:50:17 [INFO] entering GooseAttack phase: Increase

05:50:17 [INFO] launching user 1 from Anonymous Spanish user...

05:50:18 [INFO] launching user 2 from Anonymous English user...

05:50:18 [INFO] launching user 3 from Anonymous Spanish user...

05:50:18 [INFO] launching user 4 from Anonymous English user...

05:50:18 [INFO] launching user 5 from Anonymous Spanish user...

05:50:18 [INFO] launching user 6 from Anonymous English user...

05:50:18 [INFO] launching user 7 from Anonymous Spanish user...

^C05:50:18 [WARN] caught ctrl-c, stopping...

Or, to run only the "Anonymous Spanish user" and "Admin user" Scenarios, you could add --scenarios "spanish,admin":

% cargo run --release --example umami -- --hatch-rate 10 --scenarios "spanish,admin"

Compiling goose v0.18.1 (/Users/jandrews/devel/goose)

Finished release [optimized] target(s) in 11.79s

Running `target/release/examples/umami --hatch-rate 10 --scenarios spanish,admin`

05:53:45 [INFO] Output verbosity level: INFO

05:53:45 [INFO] Logfile verbosity level: WARN

05:53:45 [INFO] users defaulted to number of CPUs = 10

05:53:45 [INFO] hatch_rate = 10

05:53:45 [INFO] iterations = 0

05:53:45 [INFO] scenarios = Scenarios { active: ["spanish", "admin"] }

05:53:45 [INFO] host for Anonymous English user configured: https://drupal-9.ddev.site/

05:53:45 [INFO] host for Anonymous Spanish user configured: https://drupal-9.ddev.site/

05:53:45 [INFO] host for Admin user configured: https://drupal-9.ddev.site/

05:53:45 [INFO] allocating transactions and scenarios with RoundRobin scheduler

05:53:45 [INFO] initializing 10 user states...

05:53:45 [INFO] Telnet controller listening on: 0.0.0.0:5116

05:53:45 [INFO] WebSocket controller listening on: 0.0.0.0:5117

05:53:45 [INFO] entering GooseAttack phase: Increase

05:53:45 [INFO] launching user 1 from Anonymous Spanish user...

05:53:45 [INFO] launching user 2 from Admin user...

05:53:45 [INFO] launching user 3 from Anonymous Spanish user...

05:53:45 [INFO] launching user 4 from Anonymous Spanish user...

05:53:45 [INFO] launching user 5 from Anonymous Spanish user...

05:53:45 [INFO] launching user 6 from Anonymous Spanish user...

05:53:45 [INFO] launching user 7 from Anonymous Spanish user...

05:53:45 [INFO] launching user 8 from Anonymous Spanish user...

05:53:45 [INFO] launching user 9 from Anonymous Spanish user...

05:53:46 [INFO] launching user 10 from Anonymous Spanish user...

^C05:53:46 [WARN] caught ctrl-c, stopping...

When the load test completes, you can refer to the Scenario metrics to confirm which Scenarios were enabled, and which were not.
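The partial matching used by --scenarios in the two runs above can be approximated as a substring test against each machine name. The sketch below is an illustration under that assumption; select_scenarios is a hypothetical name and not a Goose function.

```rust
/// Hypothetical helper, not part of Goose: return the machine names that
/// contain at least one of the comma-separated filter terms.
fn select_scenarios<'a>(machine_names: &[&'a str], filter: &str) -> Vec<&'a str> {
    let wanted: Vec<&str> = filter
        .split(',')
        .map(str::trim)
        .filter(|term| !term.is_empty())
        .collect();
    machine_names
        .iter()
        .copied()
        .filter(|name| wanted.iter().any(|term| name.contains(term)))
        .collect()
}

fn main() {
    let all = ["adminuser", "anonymousenglishuser", "anonymousspanishuser"];
    println!("{:?}", select_scenarios(&all, "anon"));
    println!("{:?}", select_scenarios(&all, "spanish,admin"));
}
```

With the Umami machine names, "anon" selects both anonymous Scenarios, while "spanish,admin" selects the Admin and Anonymous Spanish Scenarios and leaves out the English one, consistent with the users launched in the logs above.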

Custom Run Time Options

It can sometimes be necessary to add custom run-time options to your load test. As Goose "owns" the command line, adding another option with gumdrop (used by Goose) or another command line parser can be tricky, as Goose will throw an error if it receives an unexpected command line option. There are two alternatives here.

Environment Variables

One option is to use environment variables. An example of this can be found in the Umami example which uses environment variables to allow the configuration of a custom username and password.

Alternatively, you can use this method to set configurable custom defaults. The earlier example can be enhanced to use an environment variable to set a custom default hostname:

use goose::prelude::*;

async fn loadtest_index(user: &mut GooseUser) -> TransactionResult {

let _goose_metrics = user.get("").await?;

Ok(())

}

#[tokio::main]

async fn main() -> Result<(), GooseError> {

// Get optional custom default hostname from `HOST` environment variable.

let custom_host = match std::env::var("HOST") {

Ok(host) => host,

Err(_) => "".to_string(),

};

GooseAttack::initialize()?

.register_scenario(scenario!("LoadtestTransactions")

.register_transaction(transaction!(loadtest_index))

)

// Set optional custom default hostname.

.set_default(GooseDefault::Host, custom_host.as_str())?

.execute()

.await?;

Ok(())

}

This can now be used to set a custom default for the scenario. In this example, with no --host set, Goose will execute a load test against the hostname defined in HOST:

% HOST="https://local.dev/" cargo run --release

Finished release [optimized] target(s) in 0.07s

Running `target/release/loadtest`

07:28:20 [INFO] Output verbosity level: INFO

07:28:20 [INFO] Logfile verbosity level: WARN

07:28:20 [INFO] users defaulted to number of CPUs = 10

07:28:20 [INFO] iterations = 0

07:28:20 [INFO] host for LoadtestTransactions configured: https://local.dev/

It's still possible to override this custom default from the command line with standard Goose options, for example here the load test will run against the hostname configured by the --host option:

% HOST="http://local.dev/" cargo run --release -- --host https://example.com/

Finished release [optimized] target(s) in 0.07s

Running `target/release/loadtest --host 'https://example.com/'`

07:32:36 [INFO] Output verbosity level: INFO

07:32:36 [INFO] Logfile verbosity level: WARN

07:32:36 [INFO] users defaulted to number of CPUs = 10

07:32:36 [INFO] iterations = 0

07:32:36 [INFO] global host configured: https://example.com/

If the HOST variable and the --host option are not set, Goose will display the expected error:

% cargo run --release

Running `target/release/loadtest`

07:07:45 [INFO] Output verbosity level: INFO

07:07:45 [INFO] Logfile verbosity level: WARN

07:07:45 [INFO] users defaulted to number of CPUs = 10

07:07:45 [INFO] iterations = 0

Error: InvalidOption { option: "--host", value: "", detail: "A host must be defined via the --host option, the GooseAttack.set_default() function, or the Scenario.set_host() function (no host defined for LoadtestTransactions)." }

Command Line Arguments

If you really need to have custom command line arguments, there is a way to make Goose not throw an error due to unexpected arguments. You can do that by, instead of calling GooseAttack::initialize(), using GooseAttack::initialize_with_config. This method differs from the first one in that it does not parse arguments from the command line, but instead takes a GooseConfiguration value as parameter. Since this type has quite a lot of configuration options, with some private fields, currently the only way you can obtain an instance of it is via the Default trait: GooseConfiguration::default().

Note that by initializing the GooseAttack in this way you are preventing Goose from reading command line arguments, so if you want to have the ability of passing the arguments that Goose allows, you will need to parse them and set them in the GooseConfiguration instance. In particular, the --host parameter is mandatory, so don't forget to set it in the configuration somehow.

The example below should illustrate these points:

use goose::config::GooseConfiguration;

use goose::prelude::*;

#[tokio::main]

async fn main() -> Result<(), GooseError> {

// here we could be using a crate such as `clap` to parse CLI arguments:

let opt = MyCustomConfig::parse();

let mut config = GooseConfiguration::default();

// we added a `host` field to our custom argument parser that matches

// the `host` field used by Goose

config.host = opt.host;

// ... here you should do the same for all the other command line parameters

// offered by Goose that you care about, otherwise they will not be taken

// into account.

// Initialize the `GooseAttack` using the `GooseConfiguration`:

GooseAttack::initialize_with_config(config)?

.register_scenario(

scenario!("User")

.register_transaction(transaction!(loadtest_index))

)

.execute()

.await?;

Ok(())

}

Assuming that MyCustomConfig has a my_custom_arg field, the program above can be invoked with a command such as:

cargo run -- --host https://localhost:8080 --my-custom-arg 42
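The example above leaves MyCustomConfig undefined. In practice it would typically be generated by a command line parsing crate; the following dependency-free sketch shows one possible shape for it. The fields, the parse_from function and the default of 0 for my_custom_arg are all assumptions made for illustration.

```rust
/// Hypothetical custom configuration with one Goose-compatible option
/// (`--host`) and one custom option (`--my-custom-arg`).
#[derive(Debug, PartialEq)]
struct MyCustomConfig {
    host: String,
    my_custom_arg: u32,
}

impl MyCustomConfig {
    /// Parse `--host VALUE` and `--my-custom-arg VALUE` from an argument list.
    fn parse_from<I>(args: I) -> Result<Self, String>
    where
        I: IntoIterator<Item = String>,
    {
        let mut host = None;
        let mut my_custom_arg = None;
        let mut args = args.into_iter();
        while let Some(flag) = args.next() {
            match flag.as_str() {
                "--host" => host = args.next(),
                "--my-custom-arg" => {
                    let raw = args.next().ok_or("--my-custom-arg requires a value")?;
                    my_custom_arg = Some(raw.parse::<u32>().map_err(|e| e.to_string())?);
                }
                other => return Err(format!("unexpected argument: {other}")),
            }
        }
        Ok(MyCustomConfig {
            // Goose needs a host, so treat it as mandatory here too.
            host: host.ok_or("--host is required")?,
            my_custom_arg: my_custom_arg.unwrap_or(0),
        })
    }
}

fn main() {
    // Skip the program name, which `std::env::args()` yields first.
    match MyCustomConfig::parse_from(std::env::args().skip(1)) {
        Ok(opt) => println!("{opt:?}"),
        Err(e) => eprintln!("error: {e}"),
    }
}
```

The host field is a String so that it can be assigned directly to config.host, as done in the example above.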

Metrics

Here's sample output generated when running a loadtest, in this case the Umami example that comes with Goose.

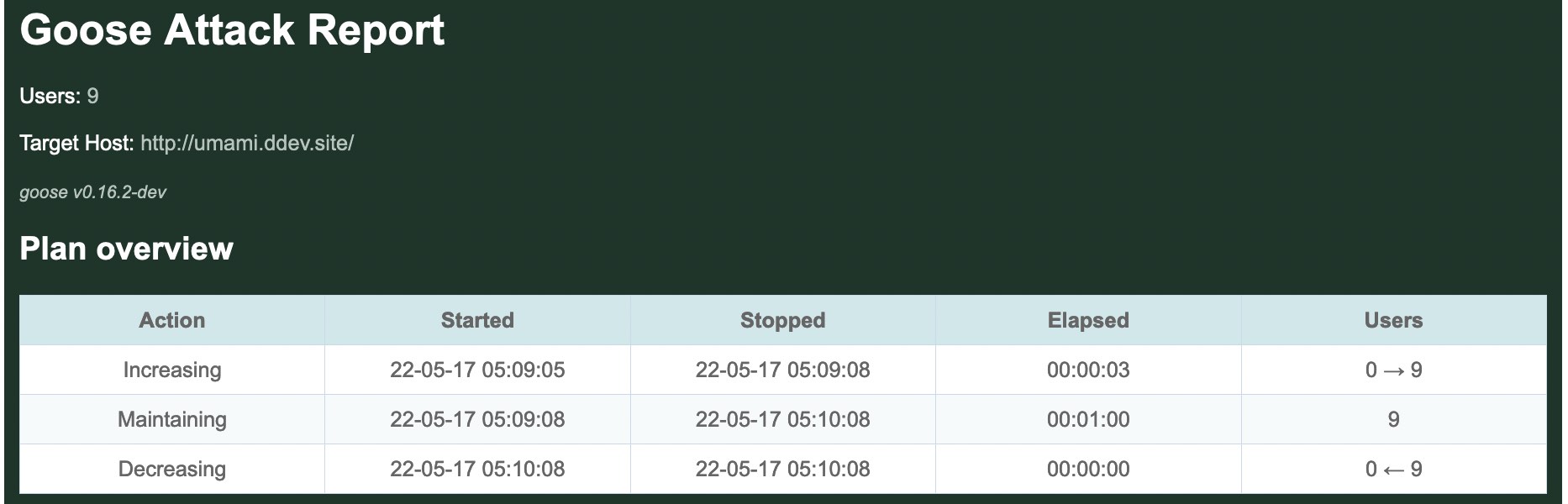

In this case, the Drupal Umami demo was installed in a local container. The following command was used to configure Goose and run the load test. The -u9 tells Goose to spin up 9 users. The -r3 option tells Goose to hatch 3 users per second. The -t1m option tells Goose to run the load test for 1 minute, or 60 seconds. The --no-reset-metrics flag tells Goose to include all metrics, instead of the default which is to flush all metrics collected during start up. And finally, the --report-file report.html tells Goose to generate an HTML-formatted report named report.html once the load test finishes.

ASCII metrics

% cargo run --release --example umami -- --host http://umami.ddev.site/ -u9 -r3 -t1m --no-reset-metrics --report-file report.html

Compiling goose v0.18.1 (~/goose)

Finished release [optimized] target(s) in 11.88s

Running `target/release/examples/umami --host 'http://umami.ddev.site/' -u9 -r3 -t1m --no-reset-metrics --report-file report.html`

05:09:05 [INFO] Output verbosity level: INFO

05:09:05 [INFO] Logfile verbosity level: WARN

05:09:05 [INFO] users = 9

05:09:05 [INFO] run_time = 60

05:09:05 [INFO] hatch_rate = 3

05:09:05 [INFO] no_reset_metrics = true

05:09:05 [INFO] report_file = report.html

05:09:05 [INFO] iterations = 0

05:09:05 [INFO] global host configured: http://umami.ddev.site/

05:09:05 [INFO] allocating transactions and scenarios with RoundRobin scheduler

05:09:05 [INFO] initializing 9 user states...

05:09:05 [INFO] Telnet controller listening on: 0.0.0.0:5116

05:09:05 [INFO] WebSocket controller listening on: 0.0.0.0:5117

05:09:05 [INFO] entering GooseAttack phase: Increase

05:09:05 [INFO] [user 1]: launching user from Anonymous Spanish user

05:09:05 [INFO] [user 2]: launching user from Anonymous English user

05:09:05 [INFO] [user 3]: launching user from Anonymous Spanish user

05:09:06 [INFO] [user 4]: launching user from Anonymous English user

05:09:06 [INFO] [user 5]: launching user from Anonymous Spanish user

05:09:06 [INFO] [user 6]: launching user from Anonymous English user

05:09:07 [INFO] [user 7]: launching user from Admin user

05:09:07 [INFO] [user 8]: launching user from Anonymous Spanish user

05:09:07 [INFO] [user 9]: launching user from Anonymous English user

All 9 users hatched.

05:09:08 [INFO] entering GooseAttack phase: Maintain

05:10:08 [INFO] entering GooseAttack phase: Decrease

05:10:08 [INFO] [user 2]: exiting user from Anonymous English user

05:10:08 [INFO] [user 3]: exiting user from Anonymous Spanish user

05:10:08 [INFO] [user 6]: exiting user from Anonymous English user

05:10:08 [INFO] [user 8]: exiting user from Anonymous Spanish user

05:10:08 [INFO] [user 4]: exiting user from Anonymous English user

05:10:08 [INFO] [user 7]: exiting user from Admin user

05:10:08 [INFO] [user 1]: exiting user from Anonymous Spanish user

05:10:08 [INFO] [user 9]: exiting user from Anonymous English user

05:10:08 [INFO] [user 5]: exiting user from Anonymous Spanish user

05:10:08 [INFO] wrote html report file to: report.html

05:10:08 [INFO] entering GooseAttack phase: Shutdown

05:10:08 [INFO] printing final metrics after 63 seconds...

=== PER SCENARIO METRICS ===

------------------------------------------------------------------------------

Name | # users | # times run | scenarios/s | iterations

------------------------------------------------------------------------------

1: Anonymous English u.. | 4 | 8 | 0.13 | 2.00

2: Anonymous Spanish u.. | 4 | 8 | 0.13 | 2.00

3: Admin user | 1 | 1 | 0.02 | 1.00

-------------------------+----------+--------------+-------------+------------

Aggregated | 9 | 17 | 0.27 | 1.89

------------------------------------------------------------------------------

Name | Avg (ms) | Min | Max | Median

------------------------------------------------------------------------------

1: Anonymous English.. | 25251 | 19,488 | 31,308 | 19,488

2: Anonymous Spanish.. | 24394 | 20,954 | 27,821 | 20,954

3: Admin user | 32431 | 32,431 | 32,431 | 32,431

-------------------------+-------------+------------+-------------+-----------

Aggregated | 25270 | 19,488 | 32,431 | 19,488

=== PER TRANSACTION METRICS ===

------------------------------------------------------------------------------

Name | # times run | # fails | trans/s | fail/s

------------------------------------------------------------------------------

1: Anonymous English user

1: anon / | 21 | 0 (0%) | 0.33 | 0.00

2: anon /en/basicpage | 12 | 0 (0%) | 0.19 | 0.00

3: anon /en/articles/ | 12 | 0 (0%) | 0.19 | 0.00

4: anon /en/articles/% | 21 | 0 (0%) | 0.33 | 0.00

5: anon /en/recipes/ | 12 | 0 (0%) | 0.19 | 0.00

6: anon /en/recipes/% | 36 | 0 (0%) | 0.57 | 0.00

7: anon /node/%nid | 11 | 0 (0%) | 0.17 | 0.00

8: anon /en term | 19 | 0 (0%) | 0.30 | 0.00

9: anon /en/search | 9 | 0 (0%) | 0.14 | 0.00

10: anon /en/contact | 9 | 0 (0%) | 0.14 | 0.00

2: Anonymous Spanish user

1: anon /es/ | 22 | 0 (0%) | 0.35 | 0.00

2: anon /es/basicpage | 12 | 0 (0%) | 0.19 | 0.00

3: anon /es/articles/ | 12 | 0 (0%) | 0.19 | 0.00

4: anon /es/articles/% | 21 | 0 (0%) | 0.33 | 0.00

5: anon /es/recipes/ | 12 | 0 (0%) | 0.19 | 0.00

6: anon /es/recipes/% | 37 | 0 (0%) | 0.59 | 0.00

7: anon /es term | 21 | 0 (0%) | 0.33 | 0.00

8: anon /es/search | 12 | 0 (0%) | 0.19 | 0.00

9: anon /es/contact | 10 | 0 (0%) | 0.16 | 0.00

3: Admin user

1: auth /en/user/login | 1 | 0 (0%) | 0.02 | 0.00

2: auth / | 4 | 0 (0%) | 0.06 | 0.00

3: auth /en/articles/ | 2 | 0 (0%) | 0.03 | 0.00

4: auth /en/node/%/e.. | 3 | 0 (0%) | 0.05 | 0.00

-------------------------+---------------+----------------+----------+--------

Aggregated | 331 | 0 (0%) | 5.25 | 0.00

------------------------------------------------------------------------------

Name | Avg (ms) | Min | Max | Median

------------------------------------------------------------------------------

1: Anonymous English user

1: anon / | 123.48 | 85 | 224 | 110

2: anon /en/basicpage | 56.08 | 44 | 75 | 50

3: anon /en/articles/ | 147.58 | 91 | 214 | 140

4: anon /en/articles/% | 148.14 | 72 | 257 | 160

5: anon /en/recipes/ | 170.58 | 109 | 242 | 150

6: anon /en/recipes/% | 66.08 | 48 | 131 | 60

7: anon /node/%nid | 94.09 | 46 | 186 | 70

8: anon /en term | 134.37 | 52 | 194 | 130

9: anon /en/search | 282.33 | 190 | 339 | 270

10: anon /en/contact | 246.89 | 186 | 346 | 260

2: Anonymous Spanish user

1: anon /es/ | 141.36 | 88 | 285 | 130

2: anon /es/basicpage | 61.17 | 43 | 92 | 51

3: anon /es/articles/ | 130.58 | 87 | 187 | 110

4: anon /es/articles/% | 164.52 | 85 | 263 | 170

5: anon /es/recipes/ | 161.25 | 108 | 274 | 120

6: anon /es/recipes/% | 65.24 | 47 | 107 | 61

7: anon /es term | 145.14 | 49 | 199 | 150

8: anon /es/search | 276.33 | 206 | 361 | 270

9: anon /es/contact | 240.20 | 204 | 297 | 230

3: Admin user

1: auth /en/user/login | 262.00 | 262 | 262 | 262

2: auth / | 260.75 | 238 | 287 | 250

3: auth /en/articles/ | 232.00 | 220 | 244 | 220

4: auth /en/node/%/e.. | 745.67 | 725 | 771 | 725

-------------------------+-------------+------------+-------------+-----------

Aggregated | 141.73 | 43 | 771 | 120

=== PER REQUEST METRICS ===

------------------------------------------------------------------------------

Name | # reqs | # fails | req/s | fail/s

------------------------------------------------------------------------------

GET anon / | 21 | 0 (0%) | 0.33 | 0.00

GET anon /en term | 19 | 0 (0%) | 0.30 | 0.00

GET anon /en/articles/ | 12 | 0 (0%) | 0.19 | 0.00

GET anon /en/articles/% | 21 | 0 (0%) | 0.33 | 0.00

GET anon /en/basicpage | 12 | 0 (0%) | 0.19 | 0.00

GET anon /en/contact | 9 | 0 (0%) | 0.14 | 0.00

GET anon /en/recipes/ | 12 | 0 (0%) | 0.19 | 0.00

GET anon /en/recipes/% | 36 | 0 (0%) | 0.57 | 0.00

GET anon /en/search | 9 | 0 (0%) | 0.14 | 0.00

GET anon /es term | 21 | 0 (0%) | 0.33 | 0.00

GET anon /es/ | 22 | 0 (0%) | 0.35 | 0.00

GET anon /es/articles/ | 12 | 0 (0%) | 0.19 | 0.00

GET anon /es/articles/% | 21 | 0 (0%) | 0.33 | 0.00

GET anon /es/basicpage | 12 | 0 (0%) | 0.19 | 0.00

GET anon /es/contact | 10 | 0 (0%) | 0.16 | 0.00

GET anon /es/recipes/ | 12 | 0 (0%) | 0.19 | 0.00

GET anon /es/recipes/% | 37 | 0 (0%) | 0.59 | 0.00

GET anon /es/search | 12 | 0 (0%) | 0.19 | 0.00

GET anon /node/%nid | 11 | 0 (0%) | 0.17 | 0.00

GET auth / | 4 | 0 (0%) | 0.06 | 0.00

GET auth /en/articles/ | 2 | 0 (0%) | 0.03 | 0.00

GET auth /en/node/%/edit | 6 | 0 (0%) | 0.10 | 0.00

GET auth /en/user/login | 1 | 0 (0%) | 0.02 | 0.00

GET static asset | 3,516 | 0 (0%) | 55.81 | 0.00

POST anon /en/contact | 9 | 0 (0%) | 0.14 | 0.00

POST anon /en/search | 9 | 0 (0%) | 0.14 | 0.00

POST anon /es/contact | 10 | 0 (0%) | 0.16 | 0.00

POST anon /es/search | 12 | 0 (0%) | 0.19 | 0.00

POST auth /en/node/%/e.. | 3 | 0 (0%) | 0.05 | 0.00

POST auth /en/user/login | 1 | 0 (0%) | 0.02 | 0.00

-------------------------+---------------+----------------+----------+--------

Aggregated | 3,894 | 0 (0%) | 61.81 | 0.00

------------------------------------------------------------------------------

Name | Avg (ms) | Min | Max | Median

------------------------------------------------------------------------------

GET anon / | 38.95 | 14 | 132 | 24

GET anon /en term | 95.63 | 22 | 159 | 98

GET anon /en/articles/ | 61.67 | 16 | 139 | 42

GET anon /en/articles/% | 94.86 | 20 | 180 | 100

GET anon /en/basicpage | 25.67 | 17 | 40 | 24

GET anon /en/contact | 34.67 | 16 | 61 | 30

GET anon /en/recipes/ | 59.83 | 17 | 130 | 45

GET anon /en/recipes/% | 27.86 | 16 | 56 | 22

GET anon /en/search | 54.33 | 20 | 101 | 30

GET anon /es term | 106.14 | 19 | 159 | 110

GET anon /es/ | 51.41 | 18 | 179 | 29

GET anon /es/articles/ | 53.42 | 17 | 110 | 27

GET anon /es/articles/% | 105.52 | 20 | 203 | 110

GET anon /es/basicpage | 27.25 | 18 | 55 | 22

GET anon /es/contact | 27.80 | 17 | 49 | 24

GET anon /es/recipes/ | 59.08 | 18 | 165 | 26

GET anon /es/recipes/% | 28.65 | 16 | 61 | 26

GET anon /es/search | 46.42 | 17 | 99 | 25

GET anon /node/%nid | 52.73 | 17 | 133 | 38

GET auth / | 140.75 | 109 | 169 | 120

GET auth /en/articles/ | 103.50 | 89 | 118 | 89

GET auth /en/node/%/edit | 114.83 | 91 | 136 | 120

GET auth /en/user/login | 24.00 | 24 | 24 | 24

GET static asset | 5.11 | 2 | 38 | 5

POST anon /en/contact | 136.67 | 99 | 204 | 140

POST anon /en/search | 162.11 | 114 | 209 | 170

POST anon /es/contact | 137.70 | 111 | 174 | 130

POST anon /es/search | 164.08 | 118 | 235 | 140

POST auth /en/node/%/e.. | 292.33 | 280 | 304 | 290

POST auth /en/user/login | 143.00 | 143 | 143 | 143

-------------------------+-------------+------------+-------------+-----------

Aggregated | 11.41 | 2 | 304 | 5

------------------------------------------------------------------------------

Slowest page load within specified percentile of requests (in ms):

------------------------------------------------------------------------------

Name | 50% | 75% | 98% | 99% | 99.9% | 99.99%

------------------------------------------------------------------------------

GET anon / | 24 | 29 | 130 | 130 | 130 | 130

GET anon /en term | 98 | 110 | 159 | 159 | 159 | 159

GET anon /en/articles/ | 42 | 92 | 139 | 139 | 139 | 139

GET anon /en/articles/% | 100 | 120 | 180 | 180 | 180 | 180

GET anon /en/basicpage | 24 | 30 | 40 | 40 | 40 | 40

GET anon /en/contact | 30 | 46 | 61 | 61 | 61 | 61

GET anon /en/recipes/ | 45 | 88 | 130 | 130 | 130 | 130

GET anon /en/recipes/% | 22 | 31 | 55 | 56 | 56 | 56

GET anon /en/search | 30 | 89 | 100 | 100 | 100 | 100

GET anon /es term | 110 | 130 | 159 | 159 | 159 | 159

GET anon /es/ | 29 | 57 | 179 | 179 | 179 | 179

GET anon /es/articles/ | 27 | 96 | 110 | 110 | 110 | 110

GET anon /es/articles/% | 110 | 140 | 200 | 200 | 200 | 200

GET anon /es/basicpage | 22 | 27 | 55 | 55 | 55 | 55

GET anon /es/contact | 24 | 35 | 49 | 49 | 49 | 49

GET anon /es/recipes/ | 26 | 110 | 165 | 165 | 165 | 165

GET anon /es/recipes/% | 26 | 34 | 57 | 61 | 61 | 61

GET anon /es/search | 25 | 78 | 99 | 99 | 99 | 99

GET anon /node/%nid | 38 | 41 | 130 | 130 | 130 | 130

GET auth / | 120 | 160 | 169 | 169 | 169 | 169

GET auth /en/articles/ | 89 | 118 | 118 | 118 | 118 | 118

GET auth /en/node/%/edit | 120 | 130 | 136 | 136 | 136 | 136

GET auth /en/user/login | 24 | 24 | 24 | 24 | 24 | 24

GET static asset | 5 | 6 | 10 | 13 | 29 | 38

POST anon /en/contact | 140 | 150 | 200 | 200 | 200 | 200

POST anon /en/search | 170 | 180 | 209 | 209 | 209 | 209

POST anon /es/contact | 130 | 150 | 170 | 170 | 170 | 170

POST anon /es/search | 140 | 180 | 235 | 235 | 235 | 235

POST auth /en/node/%/e.. | 290 | 290 | 300 | 300 | 300 | 300

POST auth /en/user/login | 143 | 143 | 143 | 143 | 143 | 143

-------------------------+--------+--------+--------+--------+--------+-------

Aggregated | 5 | 7 | 120 | 140 | 240 | 300

------------------------------------------------------------------------------

Name | Status codes

------------------------------------------------------------------------------

GET anon / | 21 [200]

GET anon /en term | 19 [200]

GET anon /en/articles/ | 12 [200]

GET anon /en/articles/% | 21 [200]

GET anon /en/basicpage | 12 [200]

GET anon /en/contact | 9 [200]

GET anon /en/recipes/ | 12 [200]

GET anon /en/recipes/% | 36 [200]

GET anon /en/search | 9 [200]

GET anon /es term | 21 [200]

GET anon /es/ | 22 [200]

GET anon /es/articles/ | 12 [200]

GET anon /es/articles/% | 21 [200]

GET anon /es/basicpage | 12 [200]

GET anon /es/contact | 10 [200]

GET anon /es/recipes/ | 12 [200]

GET anon /es/recipes/% | 37 [200]

GET anon /es/search | 12 [200]

GET anon /node/%nid | 11 [200]

GET auth / | 4 [200]

GET auth /en/articles/ | 2 [200]

GET auth /en/node/%/edit | 6 [200]

GET auth /en/user/login | 1 [200]

GET static asset | 3,516 [200]

POST anon /en/contact | 9 [200]

POST anon /en/search | 9 [200]

POST anon /es/contact | 10 [200]

POST anon /es/search | 12 [200]

POST auth /en/node/%/e.. | 3 [200]

POST auth /en/user/login | 1 [200]

-------------------------+----------------------------------------------------

Aggregated | 3,894 [200]

=== OVERVIEW ===

------------------------------------------------------------------------------

Action Started Stopped Elapsed Users

------------------------------------------------------------------------------

Increasing: 2022-05-17 07:09:05 - 2022-05-17 07:09:08 (00:00:03, 0 -> 9)

Maintaining: 2022-05-17 07:09:08 - 2022-05-17 07:10:08 (00:01:00, 9)

Decreasing: 2022-05-17 07:10:08 - 2022-05-17 07:10:08 (00:00:00, 0 <- 9)

Target host: http://umami.ddev.site/

goose v0.18.1

------------------------------------------------------------------------------

Metrics reports

In addition to the metrics displayed on the CLI above, Goose can write reports in other formats, such as Markdown, JSON, or HTML. In the example above, we told Goose to create an HTML report.

It is possible to create one or more reports at the same time, using one or more --report-file arguments. The type of report is chosen by the file extension. An unsupported file extension will lead to an error.
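Choosing the report type from the file extension can be pictured as follows. This is only a sketch of the rule, not Goose's code: the ReportFormat enum and report_format function are hypothetical, and the json extension is assumed (the html and md extensions appear in the examples earlier in this chapter).

```rust
use std::path::Path;

/// Hypothetical report formats, for illustration only.
#[derive(Debug, PartialEq)]
enum ReportFormat {
    Html,
    Markdown,
    Json,
}

/// Pick a report format from the file extension, or fail if unsupported.
fn report_format(file: &str) -> Result<ReportFormat, String> {
    let extension = Path::new(file).extension().and_then(|e| e.to_str());
    match extension {
        Some("html") => Ok(ReportFormat::Html),
        Some("md") => Ok(ReportFormat::Markdown),
        Some("json") => Ok(ReportFormat::Json),
        _ => Err(format!("unsupported report file extension: {file}")),
    }
}

fn main() {
    // Each --report-file argument is handled independently.
    for file in ["report.md", "report.html", "report.txt"] {
        println!("{file}: {:?}", report_format(file));
    }
}
```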

The following subsections describe the reports in more detail.

HTML report

Overview

The HTML report starts with a brief overview table, offering the same information found in the ASCII overview above:

NOTE: The HTML report includes some graphs that rely on the eCharts JavaScript library. The HTML report loads the library via CDN, which means that the graphs won't be loaded correctly if the CDN is not accessible.

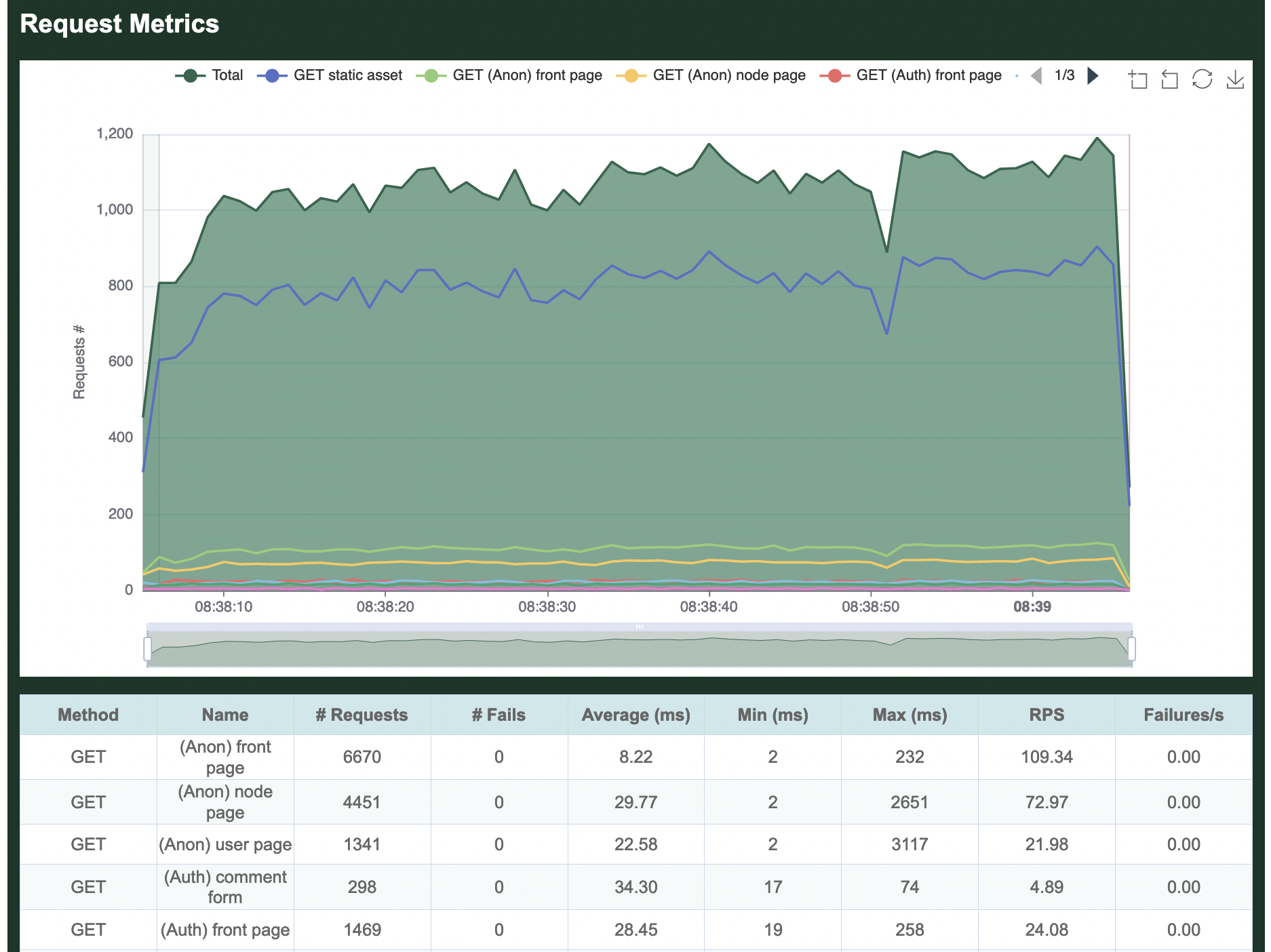

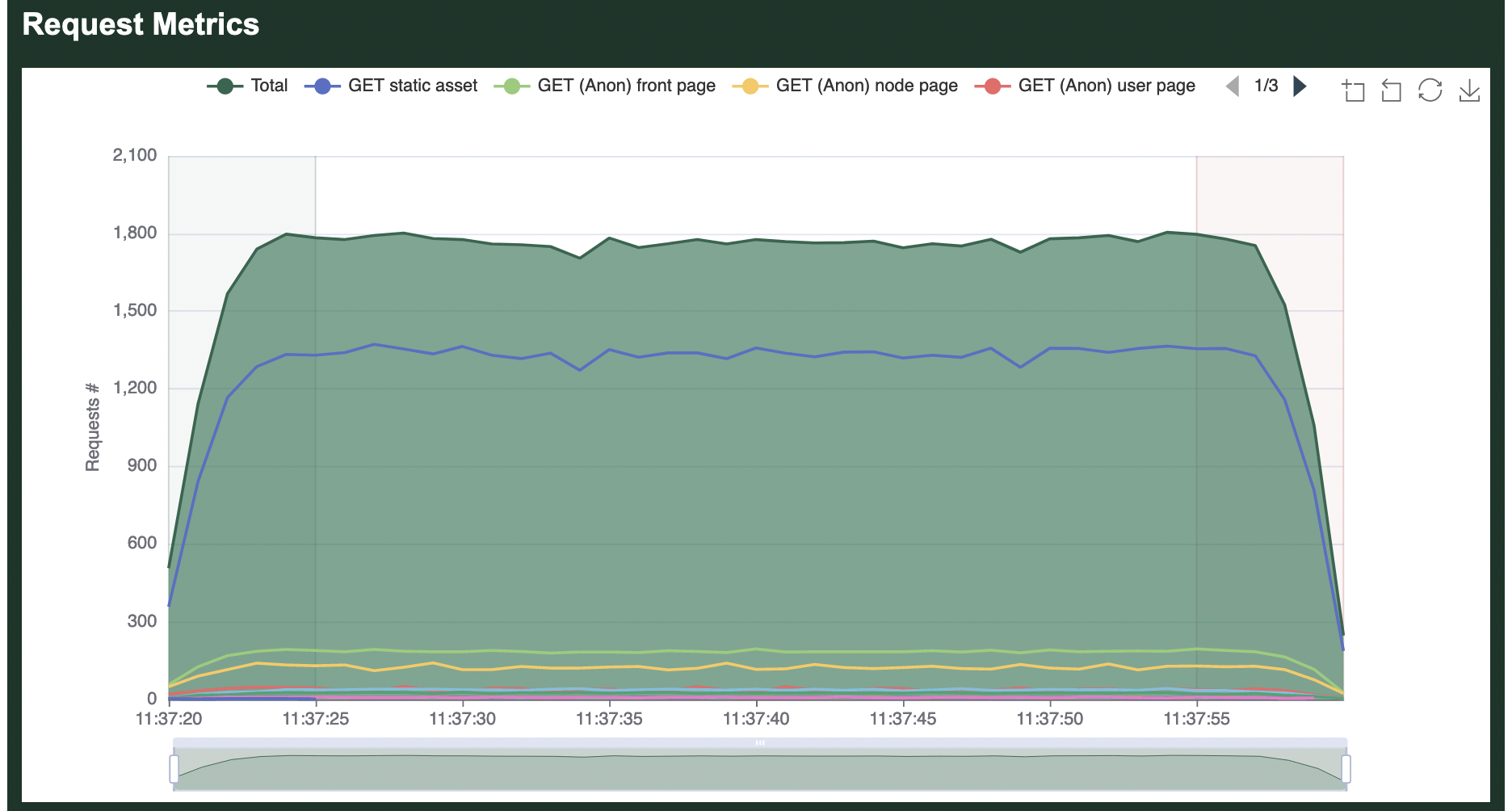

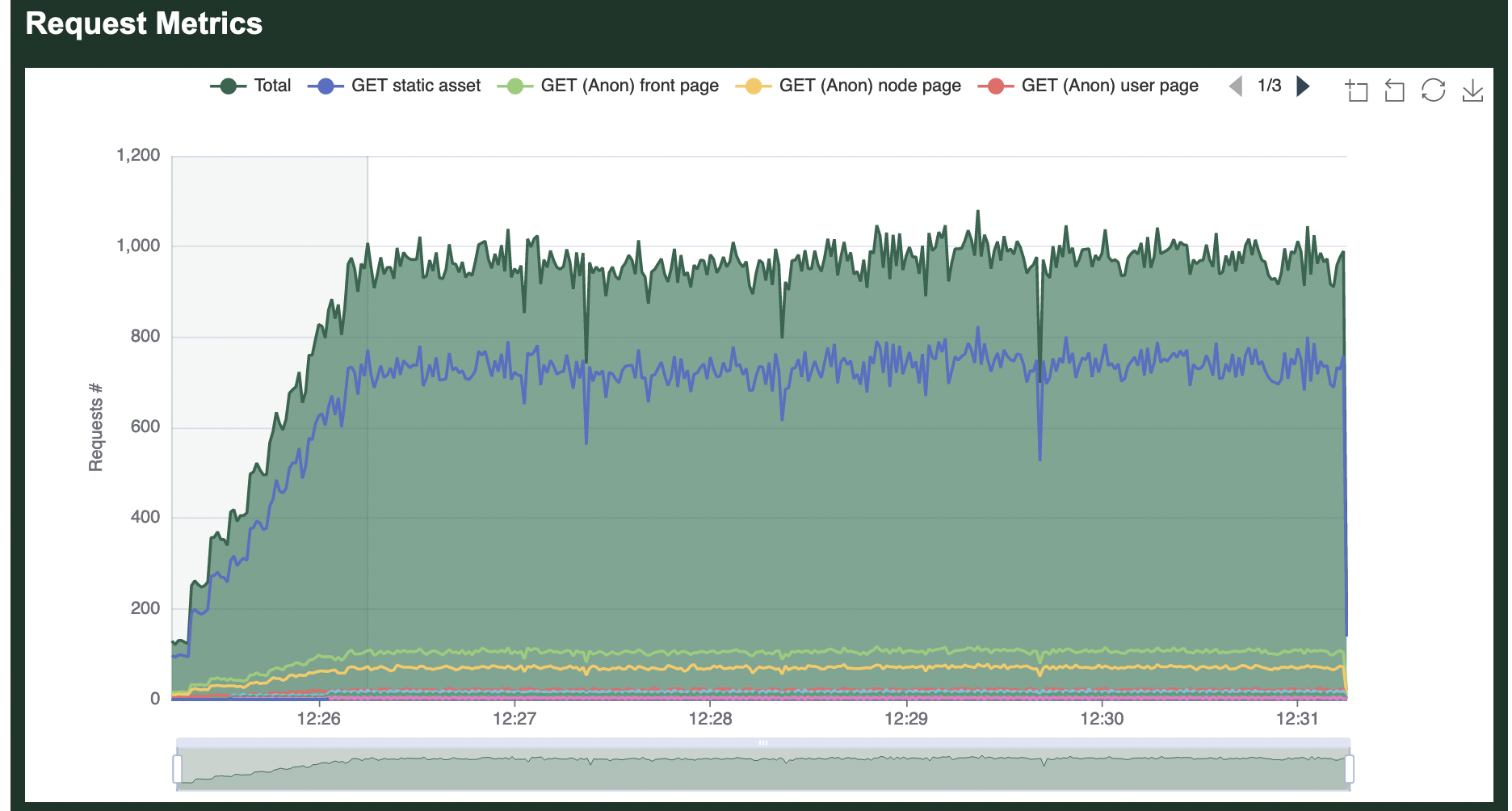

Requests

Next, the report includes a graph of all requests made over the course of the load test. By default, the graph includes an aggregated average, as well as per-request details. It's possible to click on the request names at the top of the graph to hide/show specific requests on the graphs. In this case, the graph shows that most requests made by the load test were for static assets.

Below the graph is a table that shows per-request details, only partially included in this screenshot:

Response times

The next graph shows the response times measured for each request made. In the following graph, it's apparent that POST requests had the slowest responses, which is logical as they are not cached. As before, it's possible to click on the request names at the top of the graph to hide/show details about specific requests.

Below the graph is a table that shows per-request details:

Status codes

All status codes returned by the server are displayed in a table, per-request and in aggregate. In our simple test, we received only 200 OK responses.

Transactions

The next graph summarizes all Transactions run during the load test. One or more requests are grouped logically inside Transactions. For example, the Transaction named 0.0 anon / includes an anonymous (not-logged-in) request for the front page, as well as requests for all static assets found on the front page.

Whereas a Request automatically fails based on the web server response code, the code that defines a Transaction must manually return an error for the Transaction to be considered failed. For example, the logic may be written to fail the Transaction if the HTML request fails, but not if one or more static asset requests fail.

This graph is also followed by a table showing details on all Transactions, partially shown here:

Scenarios

The next graph summarizes all Scenarios run during the load test. One or more Transactions are grouped logically inside Scenarios.

For example, the Scenario named Anonymous English user includes the above anon / Transaction, the anon /en/basicpage, and all the rest of the Transactions requesting pages in English.

It is followed by a table, shown in entirety here because this load test only has 3 Scenarios. The # Users column indicates how many GooseUser threads were assigned to run this Scenario during the load test. The # Times Run column indicates how many times in aggregate all GooseUser threads ran completely through the Scenario. From there you can see how long on average it took a GooseUser thread to run through all Transactions and make all contained Requests to completely run the Scenario, as well as the minimum and maximum amount of time. Finally, Iterations is how many times each assigned GooseUser thread ran through the entire Scenario (Iterations times the # of Users will always equal the total # of times run).

As our example only ran for 60 seconds, and the Admin user Scenario took >30 seconds to run once, the load test only ran completely through this scenario one time, also reflected in the following table:

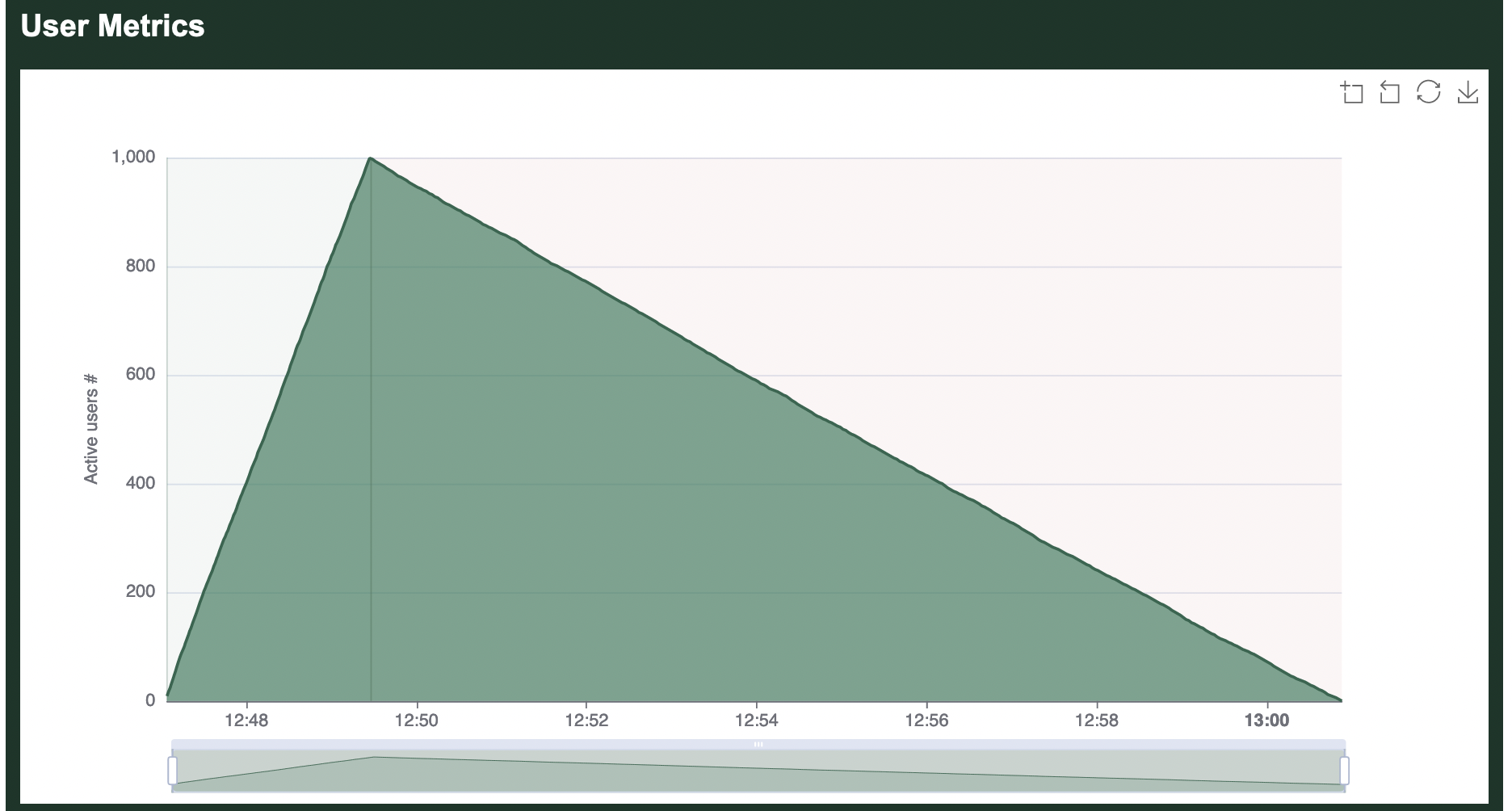
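The relationships between these columns can be checked against the ASCII metrics shown earlier. The sketch below recomputes the iterations and scenarios/s columns from the sample output; the helper names are hypothetical, and dividing by the 63 seconds reported in the log is an inference that happens to reproduce the printed values, not a statement about Goose's internals.

```rust
/// Average number of complete runs per assigned user.
fn iterations(times_run: usize, users: usize) -> f64 {
    times_run as f64 / users as f64
}

/// Average number of events per second over the whole test.
fn per_second(count: usize, seconds: usize) -> f64 {
    count as f64 / seconds as f64
}

fn main() {
    // (name, # users, # times run) copied from the sample output.
    let scenarios = [
        ("Anonymous English user", 4, 8),
        ("Anonymous Spanish user", 4, 8),
        ("Admin user", 1, 1),
    ];
    // "printing final metrics after 63 seconds..."
    let duration = 63;
    for (name, users, times_run) in scenarios {
        println!(
            "{name}: {:.2} iterations, {:.2} scenarios/s",
            iterations(times_run, users),
            per_second(times_run, duration)
        );
    }
    // Aggregated row: 9 users ran the Scenarios 17 times in total.
    println!(
        "Aggregated: {:.2} iterations, {:.2} scenarios/s",
        iterations(17, 9),
        per_second(17, duration)
    );
}
```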

Users

The final graph shows how many users were running at the various stages of the load test. As configured, Goose quickly ramped up to 9 users, then sustained that level of traffic for a minute before shutting down:

Markdown report

The Markdown report follows the structure of the HTML report. However, it does not include the chart elements.

JSON report

The JSON report is a dump of the internal metrics collection: a JSON serialization of the ReportData structure. Its main field is raw_metrics, which carries the content of GooseMetrics.

Developer documentation

Additional details about how metrics are collected, stored, and displayed can be found in the developer documentation.

Tips

Best Practices

- When writing load tests, avoid unwrap() (and variations) in your transaction functions. Goose generates a lot of load, and this tends to trigger errors. Embrace Rust's warnings and properly handle all possible errors; this will save you time debugging later.

- When running your load test, use the cargo --release flag to generate optimized code. This can generate considerably more load test traffic. Learn more about this and other optimizations in "The golden Goose egg, a compile-time adventure".
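To illustrate the first tip, here is a minimal sketch of parsing a value out of a response body without unwrap(). It uses only the Rust standard library. The `TokenError` type, the `extract_form_token` helper, and the assumed HTML attribute layout are hypothetical and chosen for illustration. Under load, a server may return an error page that lacks the expected form, and a function like this reports the problem as an error instead of panicking.

```rust
/// Hypothetical error type for this sketch.
#[derive(Debug, PartialEq)]
enum TokenError {
    /// The expected form field was not found in the body.
    Missing,
    /// The field was found but its value was empty.
    Empty,
}

/// Extract the value of a hidden `form_token` field from an HTML body.
/// Assumes the attribute layout `name="form_token" value="..."`.
fn extract_form_token(html: &str) -> Result<&str, TokenError> {
    let marker = "name=\"form_token\" value=\"";
    // `?` propagates the error to the caller instead of panicking.
    let start = html.find(marker).ok_or(TokenError::Missing)? + marker.len();
    let rest = &html[start..];
    let end = rest.find('"').ok_or(TokenError::Missing)?;
    let token = &rest[..end];
    if token.is_empty() {
        return Err(TokenError::Empty);
    }
    Ok(token)
}
```

In a transaction function, the `Err` case would typically be turned into a failed transaction rather than a crash of the whole load test.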

Errors

Timeouts

By default, Goose will time out requests that take longer than 60 seconds to return, and display a WARN level message saying, "operation timed out". For example:

11:52:17 [WARN] "/node/3672": error sending request for url (http://apache/node/3672): operation timed out

These will also show up in the error summary displayed with the final metrics. For example:

=== ERRORS ===

------------------------------------------------------------------------------

Count | Error

------------------------------------------------------------------------------

51 GET (Auth) comment form: error sending request (Auth) comment form: operation timed out

To change how long before requests time out, use --timeout VALUE when starting a load test. For example, --timeout 30 will time out requests that take longer than 30 seconds to return. To configure the timeout programmatically, use .set_default() to set GooseDefault::Timeout.

To completely disable timeouts, you must build a custom Reqwest Client with GooseUser::set_client_builder. Alternatively, you can just set a very high timeout, for example --timeout 86400 will let a request take up to 24 hours.

Debugging HTML Responses

Sometimes, while developing and debugging a load test, we'd like to view HTML responses in a browser to see where each request is actually taking us. It helps to run the test with a single user to avoid debug noise.

We can create a debug log by passing the --debug-log NAME command line option.

Each row in the debug log is a JSON object by default, so it can be processed with jq, or with jaq, a faster Rust port that supports the same commands.

To extract the HTML response from the first log entry, for example, you could use the following commands:

cat debug.log | head -n 1 | jaq -r .body > page.html

This HTML page can then be viewed in a web browser. You may need to disable JavaScript.

Killswitch

Goose provides a killswitch mechanism to programmatically stop a load test when specific conditions are met. This is useful for protecting your systems and ensuring tests stop automatically when problems are detected.

Common Use Cases

- Error Rate Threshold: Stop when error rate exceeds acceptable limits

- Response Time SLA Monitoring: Halt testing when latency violates requirements

- Health Check Integration: Monitor system health endpoints and stop on failure

- Resource Exhaustion Detection: Stop when detecting connection pool or memory issues

- Sitemap Traversal Completion: Stop after fully crawling a site's pages

- Data Set Processing: Stop when all test data has been consumed

- External Signal Integration: Stop based on monitoring system alerts

Example: Service Unavailable Detection

    use goose::prelude::*;

    async fn check_availability(user: &mut GooseUser) -> TransactionResult {
        let mut response = user.get("/api/endpoint").await?;

        // Stop the test if server returns 503 Service Unavailable
        if let Ok(response) = response.response {
            if response.status() == 503 {
                goose::trigger_killswitch("Server returned 503: Service Unavailable");
            }
        }

        Ok(())
    }

You can also check if the killswitch has been triggered using goose::is_killswitch_triggered() to conditionally execute cleanup code or skip certain operations.
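The "Error Rate Threshold" use case needs some shared state to decide when to stop. The following is a minimal sketch of that decision logic using only the Rust standard library. `ErrorRateGuard` and its methods are hypothetical names invented for this example and are not part of Goose. The guard latches once tripped, so a later run of successful requests does not reset it.

```rust
use std::sync::atomic::{AtomicBool, AtomicU64, Ordering};

/// Hypothetical shared counter that trips once the error rate is too high.
struct ErrorRateGuard {
    requests: AtomicU64,
    errors: AtomicU64,
    tripped: AtomicBool,
    max_error_rate: f64,
    min_requests: u64,
}

impl ErrorRateGuard {
    /// `min_requests` avoids tripping on the first few samples.
    fn new(max_error_rate: f64, min_requests: u64) -> Self {
        ErrorRateGuard {
            requests: AtomicU64::new(0),
            errors: AtomicU64::new(0),
            tripped: AtomicBool::new(false),
            max_error_rate,
            min_requests,
        }
    }

    /// Record one request outcome; returns true once the guard has tripped.
    fn record(&self, success: bool) -> bool {
        let requests = self.requests.fetch_add(1, Ordering::Relaxed) + 1;
        let errors = if success {
            self.errors.load(Ordering::Relaxed)
        } else {
            self.errors.fetch_add(1, Ordering::Relaxed) + 1
        };
        let rate = errors as f64 / requests as f64;
        if requests >= self.min_requests && rate > self.max_error_rate {
            // Latch: never reset, even if the rate later recovers.
            self.tripped.store(true, Ordering::Relaxed);
        }
        self.is_tripped()
    }

    fn is_tripped(&self) -> bool {
        self.tripped.load(Ordering::Relaxed)
    }
}
```

Inside a transaction function, a `true` return from `record` would be the point at which to call goose::trigger_killswitch() with a descriptive message, as in the 503 example above.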

Logging

With logging, it's possible to record all Goose activity. This can be useful for debugging errors, for validating the load test, and for creating graphs.

When logging is enabled, a central logging thread maintains a buffer to minimize the IO overhead, and controls the writing to ensure that multiple threads don't corrupt each other's messages. All log messages are sent through a channel to the logging thread and written asynchronously, minimizing the impact on the load test.
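The design described above (many producers, one buffered writer) can be sketched with the Rust standard library. This is an illustration of the general pattern only, under the assumption of plain OS threads and std channels; Goose's actual implementation may differ, and `spawn_logger` is a hypothetical name.

```rust
use std::io::{BufWriter, Write};
use std::sync::mpsc;
use std::thread;

/// Spawn a single logger thread that owns the writer. Producers send
/// complete lines through the channel, so messages never interleave.
/// The thread exits and returns the writer once every sender is dropped.
fn spawn_logger<W: Write + Send + 'static>(
    writer: W,
) -> (mpsc::Sender<String>, thread::JoinHandle<std::io::Result<W>>) {
    let (tx, rx) = mpsc::channel::<String>();
    let handle = thread::spawn(move || -> std::io::Result<W> {
        // Buffer writes to reduce the number of IO operations.
        let mut buffered = BufWriter::new(writer);
        for line in rx {
            writeln!(buffered, "{}", line)?;
        }
        buffered.flush()?;
        buffered.into_inner().map_err(|e| e.into_error())
    });
    (tx, handle)
}
```

Each producer clones the `Sender` and calls `send` with a finished line. Because only the logger thread touches the writer, no locking is needed around the output.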

Request Log

Goose can optionally log details about all the requests made during the load test to a file. This log file contains the running metrics Goose generates as the load test runs. To enable, add the --request-log <request.log> command line option, where <request.log> is either a relative or absolute path of the log file to create. Any existing file at that path will be overwritten.

If --request-body is also enabled, the request log will include the entire body of any client requests.

Logs include the entire GooseRequestMetric object which also includes the entire GooseRawRequest object, both created for all client requests.

Log Format

By default, logs are written in JSON Lines format. For example (in this case with --request-body also enabled):

{"coordinated_omission_elapsed":0,"elapsed":13219,"error":"","final_url":"http://apache/misc/jquery-extend-3.4.0.js?v=1.4.4","name":"static asset","raw":{"body":"","headers":[],"method":"Get","url":"http://apache/misc/jquery-extend-3.4.0.js?v=1.4.4"},"redirected":false,"response_time":7,"status_code":200,"success":true,"update":false,"user":4,"user_cadence":0}

{"coordinated_omission_elapsed":0,"elapsed":13055,"error":"","final_url":"http://apache/node/1786#comment-114852","name":"(Auth) comment form","raw":{"body":"subject=this+is+a+test+comment+subject&comment_body%5Bund%5D%5B0%5D%5Bvalue%5D=this+is+a+test+comment+body&comment_body%5Bund%5D%5B0%5D%5Bformat%5D=filtered_html&form_build_id=form-U0L3wm2SsIKAhVhaHpxeL1TLUHW64DXKifmQeZsUsss&form_token=VKDel_jiYzjqPrekL1FrP2_4EqHTlsaqLjMUJ6pn-sE&form_id=comment_node_article_form&op=Save","headers":["(\"content-type\", \"application/x-www-form-urlencoded\")"],"method":"Post","url":"http://apache/comment/reply/1786"},"redirected":true,"response_time":172,"status_code":200,"success":true,"update":false,"user":1,"user_cadence":0}

{"coordinated_omission_elapsed":0,"elapsed":13219,"error":"","final_url":"http://apache/misc/drupal.js?q9apdy","name":"static asset","raw":{"body":"","headers":[],"method":"Get","url":"http://apache/misc/drupal.js?q9apdy"},"redirected":false,"response_time":7,"status_code":200,"success":true,"update":false,"user":0,"user_cadence":0}

The --request-format option can be used to log in csv, json (default), raw or pretty format. The raw format is Rust's debug output of the entire GooseRequestMetric object.

Gaggle Mode

When operating in Gaggle-mode, the --request-log option can only be enabled on the Worker processes, configuring Goose to spread out the overhead of writing logs.

Transaction Log

Goose can optionally log details about each time a transaction is run during a load test. To enable, add the --transaction-log <transaction.log> command line option, where <transaction.log> is either a relative or absolute path of the log file to create. Any existing file at that path will be overwritten.

Logs include the entire TransactionMetric object which is created each time any transaction is run.

Log Format

By default, logs are written in JSON Lines format. For example:

{"elapsed":22060,"name":"(Anon) front page","run_time":97,"success":true,"transaction_index":0,"scenario_index":0,"user":0}

{"elapsed":22118,"name":"(Anon) node page","run_time":41,"success":true,"transaction_index":1,"scenario_index":0,"user":5}

{"elapsed":22157,"name":"(Anon) node page","run_time":6,"success":true,"transaction_index":1,"scenario_index":0,"user":0}

{"elapsed":22078,"name":"(Auth) front page","run_time":109,"success":true,"transaction_index":1,"scenario_index":1,"user":6}

{"elapsed":22157,"name":"(Anon) user page","run_time":35,"success":true,"transaction_index":2,"scenario_index":0,"user":4}

In the first line of the above example, GooseUser thread 0 successfully ran the (Anon) front page transaction in 97 milliseconds. In the second line, GooseUser thread 5 successfully ran the (Anon) node page transaction in 41 milliseconds.

The --transaction-format option can be used to log in csv, json (default), raw or pretty format. The raw format is Rust's debug output of the entire TransactionMetric object.

For example, csv output of transactions similar to those logged above would look like:

elapsed,scenario_index,transaction_index,name,run_time,success,user

21936,0,0,"(Anon) front page",83,true,0

21990,1,3,"(Auth) user page",34,true,1

21954,0,0,"(Anon) front page",84,true,5

22009,0,1,"(Anon) node page",34,true,2

21952,0,0,"(Anon) front page",95,true,7
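Logs in this csv layout can be summarized with a few lines of code. The following is a minimal sketch using only the Rust standard library; it assumes the seven-column layout shown above and that only the name column is quoted. `TransactionRow`, `parse_transaction_row` and `average_run_time` are hypothetical names for this example. Because the name may itself contain commas, the parser takes three fields from the left and three from the right and treats the remainder as the name.

```rust
use std::collections::BTreeMap;

/// One row of the transaction log in csv format (hypothetical type).
#[derive(Debug, PartialEq)]
struct TransactionRow {
    elapsed: u64,
    scenario_index: usize,
    transaction_index: usize,
    name: String,
    run_time: u64,
    success: bool,
    user: usize,
}

/// Parse one csv line; returns None for the header or malformed lines.
fn parse_transaction_row(line: &str) -> Option<TransactionRow> {
    // Three leading numeric fields, then everything else.
    let mut head = line.splitn(4, ',');
    let elapsed = head.next()?.parse::<u64>().ok()?;
    let scenario_index = head.next()?.parse::<usize>().ok()?;
    let transaction_index = head.next()?.parse::<usize>().ok()?;
    let rest = head.next()?;
    // Three trailing simple fields; the remainder is the quoted name.
    let mut tail = rest.rsplitn(4, ',');
    let user = tail.next()?.trim().parse::<usize>().ok()?;
    let success = tail.next()?.parse::<bool>().ok()?;
    let run_time = tail.next()?.parse::<u64>().ok()?;
    let name = tail.next()?.trim_matches('"').to_string();
    Some(TransactionRow {
        elapsed,
        scenario_index,
        transaction_index,
        name,
        run_time,
        success,
        user,
    })
}

/// Average run_time in milliseconds per transaction name.
fn average_run_time(log: &str) -> BTreeMap<String, f64> {
    let mut totals: BTreeMap<String, (u64, u64)> = BTreeMap::new();
    for row in log.lines().filter_map(parse_transaction_row) {
        let entry = totals.entry(row.name).or_insert((0, 0));
        entry.0 += row.run_time;
        entry.1 += 1;
    }
    totals
        .into_iter()
        .map(|(name, (sum, count))| (name, sum as f64 / count as f64))
        .collect()
}
```

Passing the contents of a transaction log to `average_run_time` returns a map from transaction name to mean run time. The header line and blank lines are skipped because they fail to parse.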

Gaggle Mode

When operating in Gaggle-mode, the --transaction-log option can only be enabled on the Worker processes, configuring Goose to spread out the overhead of writing logs.

Scenario Log

Goose can optionally log details about each time a scenario is run during a load test. To enable, add the --scenario-log <scenario.log> command line option, where <scenario.log> is either a relative or absolute path of the log file to create. Any existing file at that path will be overwritten.

Logs include the entire ScenarioMetric object which is created each time any scenario is run.

Log Format

By default, logs are written in JSON Lines format. For example:

{"elapsed":15751,"index":0,"name":"AnonBrowsingUser","run_time":1287,"user":7}

{"elapsed":15756,"index":0,"name":"AnonBrowsingUser","run_time":1308,"user":4}

{"elapsed":15760,"index":0,"name":"AnonBrowsingUser","run_time":1286,"user":9}

{"elapsed":15783,"index":0,"name":"AnonBrowsingUser","run_time":1301,"user":0}

{"elapsed":22802,"index":1,"name":"AuthBrowsingUser","run_time":13056,"user":8}

In the first line of the above example, GooseUser thread 7 ran the complete AnonBrowsingUser scenario in 1,287 milliseconds. In the fifth line, GooseUser thread 8 ran the complete AuthBrowsingUser scenario in 13,056 milliseconds.

The --scenario-format option can be used to log in csv, json (default), raw or pretty format. The raw format is Rust's debug output of the entire ScenarioMetric object.

For example, csv output of scenarios similar to those logged above would look like:

elapsed,name,index,run_time,user

15751,AnonBrowsingUser,0,1287,7

15756,AnonBrowsingUser,0,1308,4

15760,AnonBrowsingUser,0,1286,9

15783,AnonBrowsingUser,0,1301,0

22802,AuthBrowsingUser,1,13056,8

Gaggle Mode

When operating in Gaggle-mode, the --scenario-log option can only be enabled on the Worker processes, configuring Goose to spread out the overhead of writing logs.

Error Log

Goose can optionally log details about all load test errors to a file. To enable, add the --error-log=<error.log> command line option, where <error.log> is either a relative or absolute path of the log file to create. Any existing file at that path will be overwritten.

Logs include the entire GooseErrorMetric object, created any time a request results in an error.

Log Format

By default, logs are written in JSON Lines format. For example:

{"elapsed":9318,"error":"503 Service Unavailable: /","final_url":"http://apache/","name":"(Auth) front page","raw":{"body":"","headers":[],"method":"Get","url":"http://apache/"},"redirected":false,"response_time":6,"status_code":503,"user":1}